the Creative Commons Attribution 4.0 License.

the Creative Commons Attribution 4.0 License.

Bright: a globally distributed multimodal building damage assessment dataset with very-high-resolution for all-weather disaster response

Hongruixuan Chen

Jian Song

Olivier Dietrich

Clifford Broni-Bediako

Weihao Xuan

Junjue Wang

Xinlei Shao

Yimin Wei

Junshi Xia

Cuiling Lan

Konrad Schindler

Naoto Yokoya

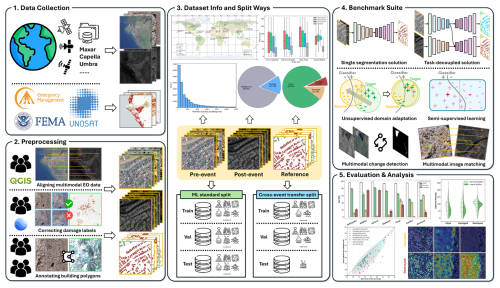

Disaster events occur around the world and cause significant damage to human life and property. Earth observation (EO) data enables rapid and comprehensive building damage assessment, an essential capability crucial in the aftermath of a disaster to reduce human casualties and inform disaster relief efforts. Recent research focuses on developing artificial intelligence (AI) models to accurately map unseen disaster events, mostly using optical EO data. These solutions based on optical data are limited to clear skies and daylight hours, preventing a prompt response to disasters. Integrating multimodal EO data, particularly combining optical and synthetic aperture radar (SAR) imagery, makes it possible to provide all-weather, day-and-night disaster responses. Despite this potential, the lack of suitable benchmark datasets has constrained the development of robust multimodal AI models. In this paper, we present a Building damage assessment dataset using veRy-hIGH-resoluTion optical and SAR imagery (Bright) to support AI-based all-weather disaster response. To the best of our knowledge, Bright is the first open-access, globally distributed, event-diverse multimodal dataset specifically curated to support AI-based disaster response. It covers five types of natural disasters and two types of human-made disasters across 14 regions worldwide, focusing on developing countries where external assistance is most needed. The dataset's optical and SAR images with spatial resolutions between 0.3 and 1 m provide detailed representations of individual buildings, making it ideal for precise damage assessment. We train seven advanced AI models on Bright to validate transferability and robustness. Beyond that, it also serves as a challenging benchmark for a variety of tasks in real-world disaster scenarios, including unsupervised domain adaptation, semi-supervised learning, unsupervised multimodal change detection, and unsupervised multimodal image matching. The experimental results serve as baselines to inspire future research and model development. The dataset (https://doi.org/10.5281/zenodo.14619797, Chen et al., 2025a), along with the code and pretrained models, is available at https://github.com/ChenHongruixuan/BRIGHT (last access: 7 November 2025) and will be updated as and when a new disaster data is available. Bright also serves as the official dataset for the 2025 IEEE GRSS Data Fusion Contest Track II. We hope that this effort will promote the development of AI-driven methods in support of people in disaster-affected areas.

- Article

(17049 KB) - Full-text XML

- BibTeX

- EndNote

A disaster is defined as a severe disruption in the functioning of a community or society due to the interaction between a hazard event and the conditions of exposure, vulnerability and capacity resulting in human, material, economic or environmental losses and impacts (Ge et al., 2020). According to the United Nations Office for Disaster Risk Reduction (UNDRR), between 1998 and 2017, natural disasters such as earthquakes, storms, and floods affected approximately 4.4 billion people and caused 1.3 million deaths. These disasters have also resulted in economic losses of 2647 billion United States dollars (USD) in disaster-affected countries (UNDRR, 2018a). The threat of disasters is likely to increase due to global urbanization (Kreibich et al., 2022; Bastos Moroz and Thieken, 2024). Rapid and comprehensive damage assessment is crucial in the aftermath of a disaster to make informed and effective rescue decisions that minimize losses and impacts. Building damage assessment aims to provide information, including the area and amount of damage, the rate of collapsed buildings, and the type of damage each building has incurred. This information is critical in the early stages of a disaster, as the distribution of damaged buildings is closely related to life-saving efforts in an emergency response (Xie et al., 2016; Adriano et al., 2021). Conducting field surveys after a disaster can be difficult and dangerous, especially when transportation and communication systems are disrupted, making efficient on-site assessments challenging. Earth observation (EO) provides a safe and efficient way to obtain information on building damage in disaster areas due to its wide field of view and contactless operation.

The EO technologies commonly used for assessing building damage after disasters are optical and synthetic aperture radar (SAR). Optical imagery is a primary source for building damage assessment because of its intuitive and easy-to-interpret nature. For example, moderate-resolution optical data from the Landsat series and Sentinel-2 have been used to assess building damage (Yusuf et al., 2001; Fan et al., 2019; Sandhini Putri et al., 2022). Landsat and Sentinel-2 data are limited in spatial resolution and only provide broad approximations of affected areas, which lack precision for specific buildings, crucial for timely rescue. The new generation of very high-resolution (VHR) optical sensors, such as IKONOS and WorldView, provides EO data with spatial resolutions of a meter or less, enabling finer assessments at the level of individual buildings (Freire et al., 2014). These data have been used successfully in building damage assessment (Yamazaki and Matsuoka, 2007; Tong et al., 2012; Freire et al., 2014).

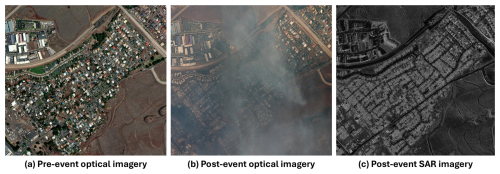

Figure 1An example of the wildfire occurring in Maui, Hawaii, USA, August 2023. (a) Pre-event optical imagery (© Maxar). (b) Post-event optical image (© Maxar) with land-cover features obscured by wildfire smoke. (c) Post-event SAR imagery (© Capella Space) unaffected by smoke, showing the disaster area.

While accurate building damage maps can be obtained by visual interpretation of optical images by human experts, this process is time-consuming and labor-intensive for large-scale rapid assessments. In addition, it requires trained professionals. Therefore, recent studies have focused on developing automated methods for rapid building damage mapping (Tong et al., 2012; Xie et al., 2016; Gupta et al., 2019; Zheng et al., 2021). Among these, machine learning (ML) and deep learning (DL) techniques have significantly improved efficiency and accuracy in building damage assessment. Earlier work focused on a single disaster event with labels annotated for a specific disaster area to train a model. This model is then used to generate building damage maps for the same event (Xie et al., 2016; Xia et al., 2023). However, since training data were limited to a few building types, damage patterns, and background land cover distributions, the resulting models mostly lack generalizability and struggle to produce accurate building damage maps for new disaster events, which limits their practical use. Recent large-scale benchmark datasets, for example, the xBD dataset (Gupta et al., 2019) containing different types of disaster scenarios and damages, have made it possible to adopt DL models to quickly and accurately map building damages after a newly occurred, previously unseen disaster (Zheng et al., 2021; Chen et al., 2022a; Shen et al., 2022; Kaur et al., 2023; Guo et al., 2024; Wang et al., 2024; Chen et al., 2024). For example, Zheng et al. (2021) trained DL models on the xBD dataset and applied them to map the damage to buildings in two unseen human-made disaster events. These studies have demonstrated the effectiveness of DL models for building damage mapping.

The optical EO technology uses a passive sensing technique, which requires solar illumination and cloud-free weather conditions. This severely limits the application of optical images in an emergency tool for all-weather disaster response (Adriano et al., 2021). In contrast, SAR sensors employ active illumination with longer microwaves and can acquire images in adverse weather conditions, offering great potential for all-weather disaster response. Most disaster events, especially wildfires, floods, and storms, are often accompanied by less-than-ideal imaging conditions. For example, Fig. 1 shows EO imagery captured for a wildfire event that occurred in August 2023 in Hawaii, USA. The post-event optical image shown in Fig. 1b does not provide clear surface information due to the effects of the wildfire smoke. However, the SAR image illustrated in Fig. 1c is not affected by smoke and clearly shows the buildings damaged by the wildfire.

Due to the advantages of SAR imagery, various SAR-based methods have been proposed for building damage assessment. These methods utilize intensity (Matsuoka and Yamazaki, 2005, 2010; Matsuoka et al., 2010), coherence (Yonezawa and Takeuchi, 2001; Arciniegas et al., 2007; Watanabe et al., 2016; Liu and Yamazaki, 2017), and polarization features (Yamaguchi, 2012; Chen and Sato, 2013; Watanabe et al., 2016; Karimzadeh and Mastuoka, 2017) to assess building damage at a block unit level, depending on the acquisition mode. Several studies have attempted to extend the block-level approach and have explored new approaches at the building instance level using higher spatial resolution sensors such as COSMO-SkyMed and TerraSAR-X (Liu et al., 2013; Brett and Guida, 2013; Chini et al., 2015; Ge et al., 2019). DL-based methods have also been explored with SAR data to assess building damage (Bai et al., 2018; Adriano et al., 2019; Bai et al., 2017; Li et al., 2023b). However, because of the lack of large-scale benchmark datasets, such as xBD in the optical domain, these methods have focused on local regions and single disaster events, and their ability to generalize to other disaster events remains largely unknown.

The inherent challenges of SAR data, such as oblique viewing angles, speckle noise, object occlusion, and geometric distortions, complicate the accurate mapping of building damage compared to optical imagery (Adriano et al., 2021; Xia et al., 2025). Furthermore, the limited availability of the VHR SAR data reduces its reliability as a source of pre-event data (Brunner et al., 2010; Adriano et al., 2021). Considering these practical limitations, the most effective strategy for rapid assessment of building damage in all weather could arguably be to combine pre-event optical images, which provide accurate localization and detailed building information in the visible spectrum, with post-event SAR images, which capture structural information as a cue for building damage (Adriano et al., 2019). Previous methods have attempted to align the two modalities with traditional statistical models (Stramondo et al., 2006; Chini et al., 2009; Brunner et al., 2010; Wang and Jin, 2012). These statistical models are sensor-specific and require dedicated modeling for each sensor. DL methods offer a promising solution by automatically learning a high-dimensional feature space that aligns the two modalities. However, to train a DL model, one must have access to a high-quality, large-scale dataset with comprehensive coverage of various disaster events and sufficient geographic diversity. This remains a significant challenge that needs to be addressed.

To support AI-based research aimed at all-weather building damage mapping, we present Bright, the first open and globally distributed multimodal VHR dataset for building damage assessment. Advances in EO technology have enabled data providers like Capella (https://www.capellaspace.com/, last access: 7 November 2025) and Umbra (https://umbra.space/, last access: 7 November 2025) to offer VHR SAR imagery at a sub-meter level resolution per pixel. This allows for detailed building damage assessments at the individual building level, to guide targeted and effective rescue operations as required by emergency responders. Benefiting from the progress made in EO, Bright incorporates both pre-event optical imagery and post-event SAR imagery with spatial resolutions ranging from 0.3 to 1 m pixel−1. The types of disaster events considered in Bright are earthquakes, storms (e.g., hurricane, cyclone), wildfires, floods, and volcanic eruptions. These natural disasters accounted for 84 % of the fatalities and 94 % of the economic losses between 1998 and 2017 (UNDRR, 2018a). In addition to natural disasters, the Bright dataset further considers disasters caused by human activity, such as accidental explosions and armed conflicts, which also pose significant threats to human life and infrastructure and can occur unexpectedly, requiring a rapid response (UNDRR, 2018b; Dietrich et al., 2025). The 14 disaster events cover 23 different regions distributed around the globe, with a focus on developing countries where external assistance is most urgently needed after a disaster. The labels are manually annotated with multi-level annotations that distinguish between damaged buildings and completely destroyed buildings.

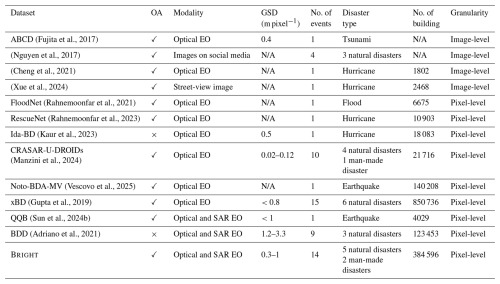

(Fujita et al., 2017)(Nguyen et al., 2017)(Cheng et al., 2021)(Xue et al., 2024)(Rahnemoonfar et al., 2021)(Rahnemoonfar et al., 2023)(Kaur et al., 2023)(Manzini et al., 2024)(Vescovo et al., 2025)(Gupta et al., 2019)(Sun et al., 2024b)(Adriano et al., 2021)Table 1Comparison of Bright with the existing building damage assessment datasets. The OA indicates whether the dataset is open access (OA) or not, and GSD is an acronym for ground sampling distance (GSD). Note that since some datasets integrate other datasets, we summarize only the largest one to avoid duplication here. For example, the BDD dataset (Adriano et al., 2021) includes the Tohoku-Earthquake-2011 dataset (Bai et al., 2018) and Palu-Tsunami-2018 dataset (Adriano et al., 2019). N/A = not available.

1.1 Comparison with existing datasets

The comparison between Bright and existing datasets for building damage assessment is summarized in Table 1. Most current building damage assessment datasets are limited in scale and scope due to the limited availability of disaster events with corresponding open-source EO data and annotation efforts (Rahnemoonfar et al., 2021; Gupta and Shah, 2021; Kaur et al., 2023). Because of the high cost and time required for pixel-level labeling, some of the existing datasets provide image-level labeling, indicating only whether an image contains damaged buildings (Fujita et al., 2017; Nguyen et al., 2017; Cheng et al., 2021; Xue et al., 2024). Although these image-level labeling datasets have served the community well, they lack the spatial precision needed to guide specific rescue operations. The xBD dataset (Gupta et al., 2019) is currently the largest open data collection, covering six natural disasters in 15 regions with more than 700 000 building instances. However, the xBD includes only optical EO data. It does not support all-weather disaster response. Sun et al. (2024b) introduced a multimodal dataset, but it is limited to a single disaster event and contains only about 4000 building instances. The small size makes it challenging to train DL models and limits the transferability of the trained models.

The dataset most similar to Bright is the BDD proposed by Adriano et al. (2021). The main differences between BDD and Bright datasets are: (1) Bright covers more disaster events and building instances, including both natural and human-made disasters. (2) Bright has higher spatial resolution in both optical and SAR images. Whereas the highest resolution of SAR images in BDD is 1.2 m, Bright provides finer detail with spatial resolutions ranging from 0.3 to 1 m, enabling the detection of subtle structural damage in individual buildings. (3) Perhaps the most important difference is that whereas the re-distribution of BDD is restricted, Bright is an open-source dataset publicly available to the global community. Apart from the datasets listed in Table 1, there are other datasets targeted at monitoring hazardous events related to disasters, including landslides (Ghorbanzadeh et al., 2022; Meena et al., 2023), floods (Bonafilia et al., 2020; Zhang et al., 2023) and wildfires (Artés et al., 2019; Huot et al., 2022; He et al., 2024), but are not related to building damage assessment.

1.2 Main contribution

The contributions of this paper are threefold:

-

We present Bright, the first multimodal building damage dataset with sub-meter spatial resolution, which is publicly available to the community. Bright employs a combination of pre-event optical imagery and post-event SAR imagery, with various disaster events and rich geographic diversity, to support the study of AI-based multimodal building damage mapping, especially in developing countries.

-

We evaluate a suite of contemporary models on Bright to establish robust baselines. Beyond supervised deep learning, Bright can support a wide range of AI-based methods. It enables research in unsupervised domain adaptation (UDA), semi-supervised learning (SSL), unsupervised multimodal change detection (UMCD), and unsupervised multimodal image matching (UMIM), among others. To demonstrate its utility, we benchmark a suite of representative models across several of these tasks. All experimental results, along with the source code and pretrained weights, are publicly released to provide strong baselines and accelerate future developments in disaster response in the community.

-

We provide an in-depth analysis that uncovers key challenges and mechanisms of the multimodal building damage assessment task. Through carefully designed experiments, we reveal the difficulties of cross-event generalization, investigate the role of pre-event optical data in aiding damage classification, and quantify the performance gaps between different post-event modalities. These findings offer valuable insights for the development of more robust and practical models for disaster response.

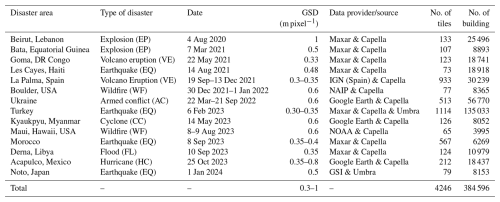

Table 2Summary of basic information of the Bright dataset with disaster events listed in chronological order. GSI refers to the Geospatial Information Authority of Japan, and IGN refers to the Instituto Geográfico Nacional (National Geographic Institute) of Spain.

Figure 3Overall flowchart of developing the Bright dataset. Logos/seals are shown solely for identification and scholarly illustration; no endorsement is implied. Copernicus Emergency Management Service logo © European Union (Copernicus Programme); UNOSAT logo © UNITAR/UNOSAT; FEMA seal: public-domain work of the U.S. federal government; QGIS logo © QGIS.org Association; Google Earth logo © Google LLC. All rights reserved by their respective owners.

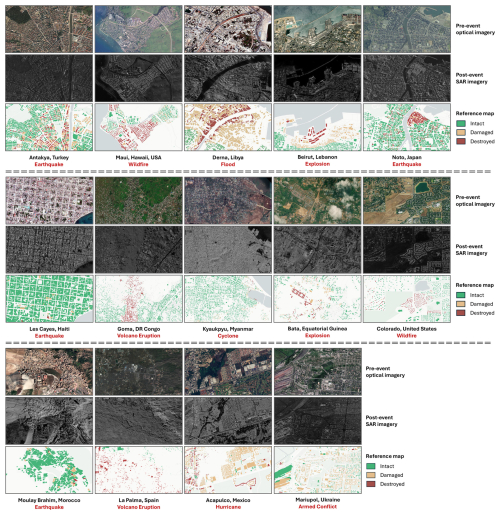

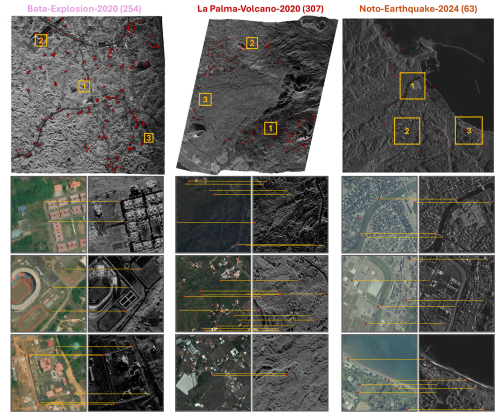

Figure 4Thumbnails of local areas in 14 disaster events in the Bright dataset. The sources of EO images are illustrated in Table 2. For visualization purposes, different events have different scales.

2.1 Study areas and disaster events

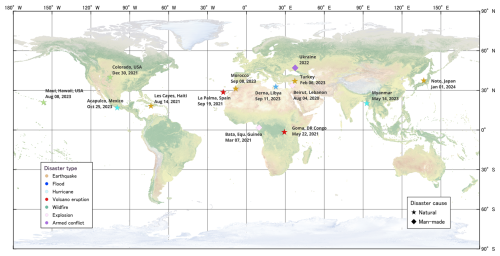

We selected 14 disaster events across the globe for Bright, as illustrated in Fig. 2 and Table 2. Since both Capella Space and Umbra satellites were launched in 2020, we focused on study areas where disasters have occurred since then. The selected regions are primarily in developing countries, where public administration and disaster response capacities tend to be weaker compared to those in developed nations, making international assistance more critical. The dataset covers five major types of natural disasters: earthquakes, storms (including hurricanes and cyclones), wildfires, floods, and volcanic eruptions. Additionally, it includes human-made disasters, such as accidental explosions and armed conflicts. Detailed descriptions of the 14 disaster events are provided in Appendix A.

2.2 Construction of Bright

Figure 3 shows the flowchart of developing Bright. The optical EO data in the dataset are mainly from Maxar's Open Data program (https://www.maxar.com/open-data, last access: 7 November 2025), while the SAR EO data are from Capella Space (https://www.capellaspace.com/earth-observation/gallery, last access: 7 November 2025) and Umbra (https://umbra.space/open-data/, last access: 7 November 2025). Both Capella and Umbra data have two imaging modalities, i.e., Spotlight and Stripmap, respectively. The Spotlight mode has a higher spatial resolution but less coverage. In the region of interest, we preferred Spotlight mode if suitable data was available in the data provider's inventory. Otherwise, we chose Stripmap. The optical EO data consists of red, blue, and green bands, while the SAR EO data consists of amplitude data in the VV or HH bands. For optical EO data, the digital number was converted to reflectance and then standardized to an 8-bit data format. For SAR imagery, after the data had been terrain-corrected, we utilized the pre-processed 8-bit data when available. In cases where 8-bit data was not provided, we employed the data provider's recommended method (https://support.capellaspace.com/scaling-geo-images-in-qgis, last access: 7 November 2025) to convert the amplitude data. Although both optical and SAR images are geocoded, there are still pixel offsets between them. Therefore, multiple EO experts manually aligned the paired optical and SAR data and cross-checked their results to ensure the precise registration between the two modalities. Figure C1 in Appendix C shows the selected control points on three disaster scenes.

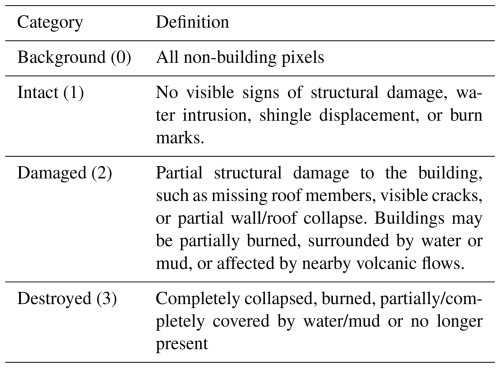

The labels in Bright consist of two components: building polygons and post-disaster building damage attributes. Expert annotators manually labeled the building polygons, then all labels underwent independent visual inspections of EO experts to ensure accuracy. Damage annotations were obtained from Copernicus Emergency Management Service (https://emergency.copernicus.eu, last access: 7 November 2025), the United Nations Satellite Centre (UNOSAT) Emergency Mapping Products (https://unosat.org/products, last access: 7 November 2025), and the Federal Emergency Management Agency (FEMA) (https://www.fema.gov, last access: 7 November 2025). These annotations were derived through visual interpretation of high-resolution optical imagery captured before and after the disasters by EO experts, supplemented by partial field visits. To harmonize these diverse annotations and ensure consistency across all 14 disaster events, we implemented a rigorous, multi-stage process. First, we established a single, standardized three-tier classification scheme, including Intact (with pixel value 1), Damaged (with pixel value 2), and Destroyed (with pixel value 3), with clear definitions provided in Table 3, drawing on the frameworks of FEMA's Damage Assessment Operations Manual, EMS-98, the BDD dataset (Adriano et al., 2021), and the xBD dataset (Gupta et al., 2019). While the source agencies' terminology can differ (e.g., “Severe Damage” vs. “Major Damage”), their underlying definitions for EO-based assessment are conceptually consistent. We leveraged this alignment for an initial rule-based mapping, where various intermediate damage tiers were conservatively aggregated into our single “Damaged” category. Second, our team of EO experts conducted a comprehensive manual verification and refinement of every annotation using multi-temporal VHR imagery on platforms like Google Earth Pro. This final stage served as the ultimate guarantor of consistency. We paid special attention to ambiguous source labels, such as “Possibly Damaged”. Adopting a conservative approach, these were re-classified as “Intact” if clear structural damage was not evident, thereby ensuring a high-confidence “Damaged” class. We also manually disaggregated all area-based annotations (i.e., where an entire block was assigned a single category). We re-processed these to assign a precise, building-wise damage label to each individual structure, ensuring instance-level consistency and granularity across the entire dataset. The damage annotations were provided as vector point files. The final building damage labels were generated by overlaying these points withd the building polygons and assigning corresponding damage attributes. To prevent geographic misallocation due to possible coordinate offsets, the coordinate systems of the points and polygons were unified, with a visual inspection performed prior to the final allocation. Figure 4 presents thumbnails of selected local areas from the 14 disaster events.

2.3 Statistics of Bright

The basic information about Bright, including disaster events, EO data, the number of corresponding EO tiles, and the total number of building pixels, is summarized in Table 2. After cropping the EO data into 1024 × 1024-pixel tiles, Bright contains 4246 multimodal image pairs.

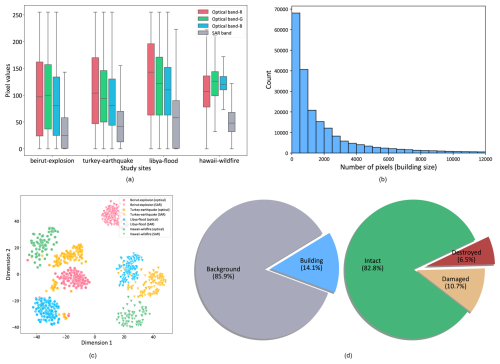

Figure 5Statistics of the Bright dataset. (a) Distributions of band values of samples from four study sites. (b) Distribution of building scales. (c) Feature distribution of buildings of four events under two imaging modalities. (d) Percentage of building and background pixels and percentage of different damage levels in building pixels.

The key statistics of Bright are illustrated in Fig. 5. Figure 5a shows the pixel value distribution for optical and SAR images from one human-made disaster and three natural disasters. The varying geographical landscapes and land cover across different regions result in distinct means and standard deviations of pixel values. This highlights Bright's geographical diversity, which makes it a robust dataset for studying building damage assessment in diverse environments. To ensure that models trained on Bright can accurately detect buildings and assess damage levels, it is crucial that the dataset includes a wide variety of building styles from different regions. Figure 5b shows that Bright covers buildings at multiple scales, exhibiting a “long-tail” distribution. This multi-scale representation challenges DL models to develop the ability to capture features at varying scales, enhancing robustness and accuracy.

Figure 5c further illustrates the feature distribution of buildings in the optical and SAR images for the four events shown in Fig. 5a, which demonstrates clear inter-event separability in both modalities. Bright also faces a significant challenge of sample imbalance, as shown in Fig. 5d. There is a notable imbalance between background pixels and foreground (building) pixels, with a ratio of approximately 7:1. The imbalance exists within the damage categories: about 6.5 % of building pixels represent destroyed buildings, 10.7 % correspond to damaged buildings, and 82.8 % are intact buildings. This imbalance can complicate model training, necessitating careful strategies to develop robust DL models.

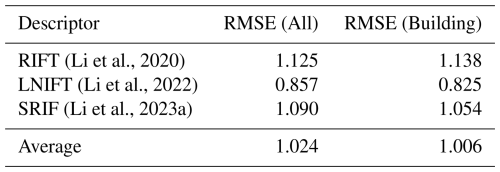

(Li et al., 2020)(Li et al., 2022)(Li et al., 2023a)Table 4Proxy registration errors (in pixel) estimated using different multimodal image descriptors. The estimated registration errors for each event are reported in Table C1 in Appendix C.

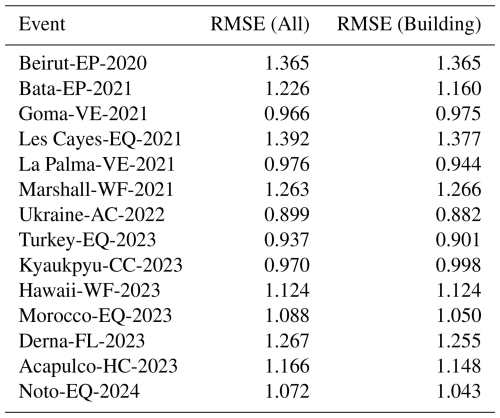

Moreover, since accurate registration ensures spatial consistency across modalities, the registration accuracy between optical and SAR EO data in Bright was analyzed to provide a solid foundation for accurate building damage assessment. Due to the absence of real ground truth, a proxy method that leverages existing multimodal image descriptors was introduced to estimate registration errors. This approach is detailed in Appendix C. The Table 4 reports the mean registration errors, measured as the root mean square error (RMSE) of pixel displacements. It was obtained using three representative multimodal image registration methods: RIFT (Li et al., 2020), SRIF (Li et al., 2023a), and LNIFT (Li et al., 2022). The overall average RMSE is approximately 1.024 pixels, with a lower error of 1.006 pixels specifically within the building regions.

2.4 Dataset splitting strategy

To train DL models using Bright and evaluate their generalizability, it is necessary to split the dataset into a training set, validation set, and test set. Gerard et al. (2024) suggested that dividing the dataset on an event-by-event basis, rather than randomly across the entire dataset, provides a more accurate reflection of a model's generalizability. Therefore, for the 14 events listed in Table 2, we divide the corresponding data for each event into a ratio of for training, validation, and test subsets, respectively. Then, the subsets obtained for each event are merged to create the final training, validation, and test sets. In the experiments, the baseline models are trained using the training set, and the optimal hyperparameters (e.g., learning rate) and checkpoints are selected based on performance on the validation set. The generalization capability of the baseline models is subsequently evaluated on the test set.

In addition to the above standard ML data splitting, we also introduce a cross-event transfer setup to better evaluate the ability of models to generalize across disaster events. This is a critical challenge in real-world applications where models are expected to handle unseen disaster types and locations. Two setups are established for cross-event transfer generalization:

-

Zero-shot setup: This setting mimics a real-world scenario where a newly occurring disaster must be analyzed without any prior labeled data from the same event. We isolate one event as an unseen test set while using the remaining 13 events for training and validation. This setting evaluates the cross-event generalization ability of models, testing how well learned knowledge can be transferred from previous disasters to an entirely new disaster event. Due to the high variability of disaster types and geographies, this setup is inherently challenging, as models trained on past disasters may struggle to assess damage patterns in a previously unseen event accurately.

-

One-shot setup: Recognizing the difficulty of the zero-shot setup, we introduce a one-shot setup. This setting simulates a realistic scenario where a single, representative sample from the new disaster can be quickly labeled to guide model adaptation. In this setting, a limited subset of labeled data (one pair for training and one pair for validation) from the target disaster event is incorporated into the training process. At the same time, the majority of the test set remains unseen. This setup evaluates the model's ability to leverage a minimal amount of manually labeled data to improve disaster-specific adaptation.

It is worth noting that our cross-event transfer setup differs from classic few-shot learning tasks in the computer vision field (Shaban et al., 2017; Wang et al., 2020). Our goal is not to recognize new classes, but to adapt the model's knowledge of existing classes to a new domain, i.e., an unseen disaster event.

3.1 Problem statement

The objective of building damage assessment is to interpret EO data of areas affected by a disaster by generating a building damage map that reflects the extent of damage to buildings. To achieve this, two common approaches are typically employed. One is to directly treat the building damage assessment task as a single semantic segmentation task (Adriano et al., 2021; Gupta and Shah, 2021). In this approach, the pre- and post-event images are taken as inputs of the model, and then the final damage map is directly predicted. This process can be formalized as , where is the pre-event imagery, is the post-event imagery, ℳseg(⋅) is a semantic segmentation model, Ydam is the obtained damage map. In the context of this paper, is VHR optical imagery and is VHR SAR imagery.

The second adopts the task decoupling approach (Gupta et al., 2019; Zheng et al., 2021), which breaks down building damage assessment into two subtasks: the building localization task, i.e., separating the building from the background, and the damage classification task, i.e., focusing on the classification between different levels of damage. This approach can be formulated as and , where Yloc is the building localization map, Yclf is the damage classification map, ℳloc(⋅) and ℳclf(⋅) are models for building localization and damage classification, respectively. ℳloc(⋅) and ℳclf(⋅) can be two separate models (Gupta et al., 2019) or a unified multi-task learning model (Zheng et al., 2021; Chen et al., 2022a, 2024). The final building damage map is obtained by combining the two outputs using a simple mask operation: . Since this work aims not only to provide a large-scale multimodal dataset to support all-weather disaster response, but also to offer insights for designing appropriate methods in future research, both approaches are employed in the experiments to compare their results.

It is worth noting that in this work, we focus on the formulation of building damage assessment as a bi-temporal task, where both pre- and post-event images are used as inputs. This formulation aligns closely with generic change detection tasks, which aim to identify changes between two time points. Conceptually, building damage assessment can be viewed as a specialized “one-to-many” semantic change detection problem (Zheng et al., 2021, 2024; Lu et al., 2024), where the objective is not only to detect whether a change has occurred but also to categorize the type and severity of changes (damages) to buildings. Many existing methods are thus derived from or adapted versions of generic change detection frameworks (Chen et al., 2024; Zheng et al., 2024; Guo et al., 2024).

3.2 Benchmark suites

Several advanced deep network architectures from both the computer vision and EO communities are evaluated on Bright. Since building damage assessment can be considered a specialized semantic segmentation task, we adopted two well-known segmentation networks from the computer vision field: UNet (Ronneberger et al., 2015) and DeepLabV3+ (Chen et al., 2018); and five state-of-the-art networks from the EO community: SiamAttnUNet (Adriano et al., 2021), SiamCRNN (Chen et al., 2020), ChangeOS (Zheng et al., 2021), DamageFormer (Chen et al., 2022a), and ChangeMamba (Chen et al., 2024). These seven networks encompass a broad range of representative DL architectures, including convolutional neural networks (CNNs), recurrent neural networks (RNNs), Transformers, and the more recent Mamba architecture. Among the seven networks, UNet, DeepLabV3+, and SiamAttnUNet adopt the first approach defined in Sect. 3.1, i.e., directly treating building damage assessment as a single semantic segmentation task. In contrast, SiamCRNN, ChangeOS, DamageFormer, and ChangeMamba adopt the second approach by decoupling the task into building localization and damage classification tasks.

Beyond supervised DL models, Bright also enables the evaluation of other learning strategies and methods commonly explored in the EO and computer vision communities:

-

Unsupervised domain adaptation (UDA) methods for the zero-shot transfer setup, enabling models to transfer knowledge across disaster events with no labeled samples from the target event.

-

Semi-supervised learning (SSL) approaches for the one-shot transfer setup, leveraging a small number of labeled samples and the remaining unlabeled samples from new disaster events to refine model adaptation.

-

Unsupervised multimodal change detection (UMCD) methods, which exploit the modality-independent relationship in optical and SAR data to detect land-cover changes without requiring manual annotations.

-

Unsupervised multimodal image matching (UMIM) methods, which aim to learn modality-independent features to enable automatic registration of multimodal data without relying on manual alignment.

3.3 Model training

To train the supervised models, we use a combination of cross-entropy loss and Lovasz softmax loss (Berman et al., 2018). Cross-entropy loss serves as the basic loss function for dense prediction tasks, while Lovasz softmax loss effectively addresses sample imbalance between non-building and building pixels and across different damage levels. For UNet, DeepLabV3+, and SiamAttnUNet, which directly predict damage maps from the input multimodal image pairs, the training loss function is defined as:

For SiamCRNN, ChangeOS, DamageFormer, and ChangeMamba, which decouple building damage assessment into building localization and damage classification subtasks, the training loss function is defined as:

All the models are trained using the AdamW optimizer (Loshchilov and Hutter, 2017) with a learning rate of and a weight decay of . The training process consists of 50 000 iterations, with a batch size of 16. To enhance sample diversity and improve model generalization, we apply several data augmentation techniques, including random flipping, random rotation (in 90° increments), and random cropping. For the zero-shot and one-shot setups, we also test UDA and SSL techniques to better utilize unlabeled and limited target disaster data. The training settings for the UDA, SSL, UMCD, and UMIM approaches are provided in Appendix F, G, H, and I, respectively.

3.4 Accuracy assessment

We adopt overall accuracy (OA), F1 score (F1), and mean intersection over union (mIoU) to evaluate the performance of the models. These are commonly used metrics in building damage assessment (Zheng et al., 2021). Following the setup in previous unimodal building damage assessment studies and the related xView2 Challenge (Gupta et al., 2019), the F1 score is used to assess the performance of the models in the building localization and damage classification subtasks. OA and mIoU are used to measure the overall quality of the building damage map, providing a comprehensive assessment of the models' ability to localize buildings and classify damage levels accurately.

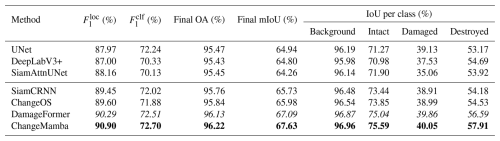

4.1 Evaluation on standard machine learning data split

Table 5 shows the results for each model on the test set. We observe that ChangeMamba achieves the best overall performance, with an OA of 96.22 %, a mIoU of 67.63 %, and the highest and scores of 90.90 % and 72.70 %, respectively. DamageFormer also performs well, following ChangeMamba, with a mIoU of 67.09 % and an OA of 96.13 %. Both models demonstrate a strong capability in the building localization and damage classification tasks. The accuracy of ChangeMamba and DamageFormer underscores the importance of leveraging advanced DL architectures to improve performance in complex tasks such as building damage assessment. For models that use a direct prediction approach (UNet, DeepLabV3+, SiamAttnUNet), UNet achieves the best results, with a mIoU of 64.94 % and an OA of 95.47 %. However, its performance still lags behind the decoupled models, which emphasizes the advantage of task decoupling.

Table 5Accuracy assessment for different DL models on the test set under the standard ML data split (set-level mIoU). The highest values in each column are in bold, and the second-highest values are italicized.

Table 6The mIoU on different events for different DL models (event-level mIoU). The highest values in each event (row) are in bold, and the second-highest values are italicized.

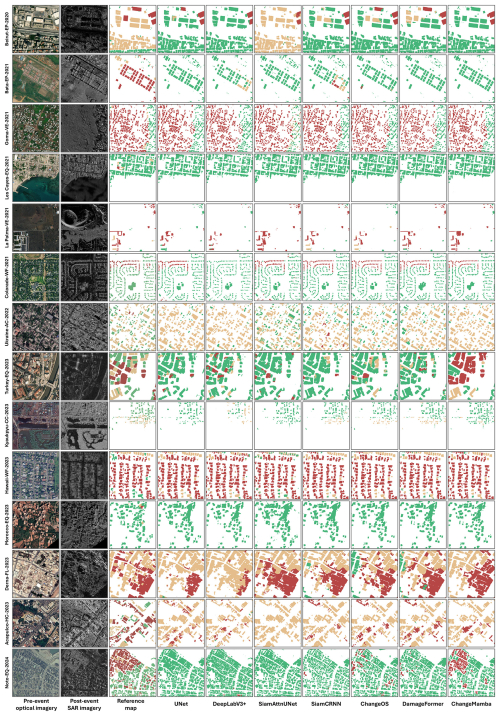

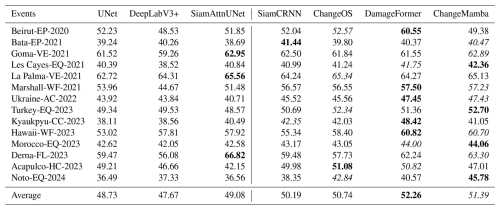

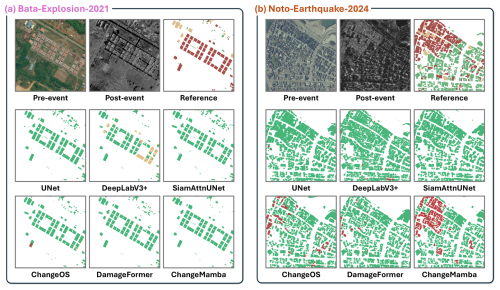

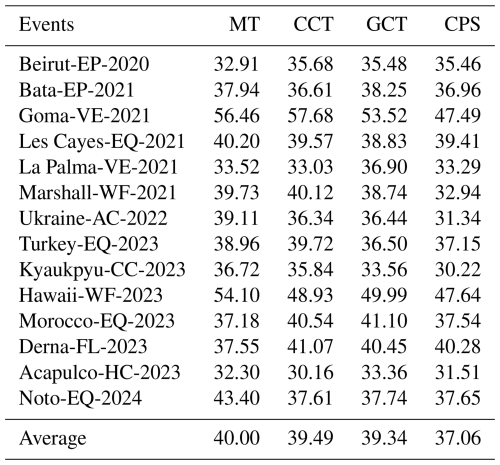

To ensure that the evaluation is not dominated by a few events with a large number of images, e.g., Turkey-EQ-2023, Table 6 presents the event-level mIoU for each model. ChangeMamba and DamageFormer achieve the highest average mIoU, with scores of 51.39 % and 52.26 %, respectively. DamageFormer performs very well on events such as Beirut-EP-2020, Marshall-WF-2021, and Derna-FL-2023. This shows its robustness across different types of disasters. Although performance varies across events, earthquake-related events such as Les Cayes-EQ-2021, Morocco-EQ-2023, and Noto-EQ-2024 present a greater challenge to all models, with a relatively low average mIoU. This highlights the need for further research to improve the robustness of earthquake damage assessment models, particularly where damage patterns are more complex and diverse. Figure 6 shows some building damage maps obtained by the seven models on the test set.

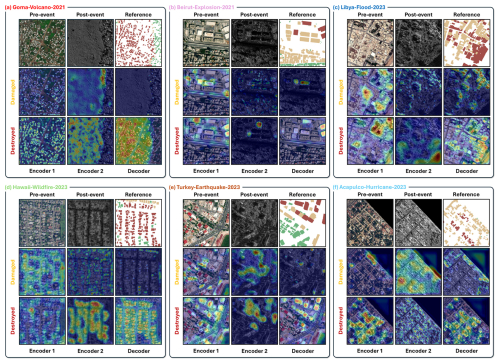

4.2 What have the models learned and what can they learn?

To better understand the models' behavior beyond performance metrics, we explore the internal attention patterns of the trained ChangeOS using class activation maps (CAMs) (Selvaraju et al., 2017). Figure 7 presents the CAM responses of ChangeOS across six representative disaster events: volcano, explosion, flood, wildfire, earthquake, and hurricane. We observe that the attention distribution varies across disaster types. Taking Goma-VE-2021 as an example, for the “Destroyed” category, the encoder exhibits strong activations in nearly all the built-up regions in the optical images, accurately localizing individual buildings. This suggests that the model has effectively learned to extract detailed structural cues from pre-event optical imagery. In contrast, for the SAR images, the encoder shows intense activation over the lava-covered regions on the left. This indicates that the model has identified the lava-covered regions as a key signal for destruction, likely due to the significant backscatter changes caused by lava flow. In the “Damaged” category, the activations are more subtle. Attention is primarily focused near the boundary of the lava flow, where partial or ambiguous structural changes occur. In the decoder, the destroyed buildings in the lava-affected area are strongly activated, which aligns well with the reference labels. Conversely, for the “Damaged” class, only a few regions are activated. This suggests that inferring partial damage from SAR imagery in volcanic disaster scenarios remains a significant challenge, as subtle structural degradation is often not clearly reflected in SAR backscatter or texture.

Figure 7Visualization of feature response to “Damaged” and “Destroyed” categories in different layers of deep models over three event cases. (a) Goma-VE-2021. (b) Beirut-EP-2023. (c) Libya-FL-2023. (d) Hawii-WF-2023. (e) Turkey-EQ-2023. (f) Acapulco-HC-2023. In the visualization, closer to red indicates larger response values, and closer to dark blue the opposite. See Appendix B for implementation details.

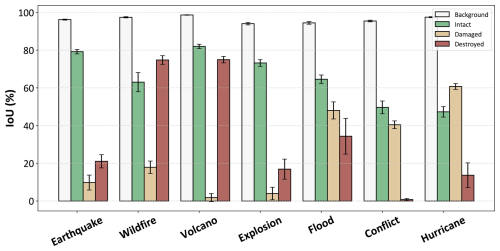

Figure 8IoU distribution of deep models over seven disaster types. Each bar represents the average IoU of seven DL models for that specific category under each disaster type. The error bars indicate the standard deviation of IoU scores across the seven models.

Beyond understanding what the models have already learned, a more critical question is: What can they learn? More specifically, to what extent can optical–SAR modality improve the accuracy of building damage assessment across diverse disaster scenarios? To investigate this, we aggregate the IoUs of the seven models and calculate their average IoU across the seven major disaster types, as shown in Fig. 8. The wildfire and volcano events exhibit the highest IoU scores for the “Destroyed” category, both exceeding 70 %. This indicates that the model can effectively leverage SAR-based backscatter anomalies, such as lava flows or widespread debris fields, to detect fully destroyed structures. These results demonstrate a strong potential of optical–SAR fusion in such high-impact scenarios. For the “Damaged” category, the performance drops significantly. The average IoU for damaged buildings in wildfire events remains below 20 %, while in volcano events, it falls to around 5 %. This suggests that single-polarization SAR imagery lacks the fine-grained information needed to reliably distinguish partially damaged buildings, where structural integrity may still be partially preserved and backscatter signals remain ambiguous. In the earthquake events, both damaged and destroyed categories yield relatively low IoUs. This is likely due to the complex and heterogeneous patterns of structural collapse typical of seismic events, where damage is often subtle, partial, and highly variable. These conditions pose significant challenges for SAR-based assessment. Interestingly, the model achieves relatively high IoU scores in the flood and hurricane events for the “Damaged” category with approximately 50 % and 60 %, respectively. This indicates that SAR effectively captures contextual environmental changes, such as water inundation or terrain disruption, which indirectly aid in assessing building damage. In the case of the conflict event, the model's performance on the “Destroyed” class is surprisingly low. This might be attributed to the limited number of destroyed samples in the dataset for this category, which leads to insufficient learning and poor generalization.

Figure 9Typical failure cases of different models on Bata-Explosion-2021 and Noto-Earthquake-2024 in Bright, where optical images are from © Maxar and © GSI Japan and SAR images are from © Capella Space and © Umbra.

These quantitative limitations are vividly illustrated by the typical failure cases shown in Fig. 9. In the Bata-Explosion-2021 event, models misclassify severely destroyed buildings as intact, reflecting the difficulty of interpreting heterogeneous debris patterns. Similarly, in the Noto-Earthquake-2024 event, large-scale collapses are largely missed, highlighting the challenge of diverse and subtle seismic damage. These examples visually confirm that the significant heterogeneity in damage patterns makes it challenging for models to learn a consistent and generalizable representation of damage.

In summary, these findings confirm both the promise and limitations of optical-SAR modality for all-weather, global-scale disaster response. Although this combination performs well in events characterized by large-scale surface disruption (e.g., wildfires, volcanoes), it struggles with subtle or localized damage patterns. Incorporating richer data sources, such as fully polarimetric SAR and LiDAR data, can further enhance the accuracy and reliability of future all-weather building damage assessments.

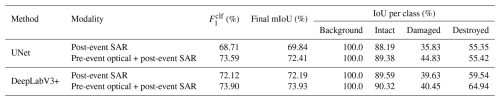

Table 7Performance comparison of UNet and DeepLabV3+ using only post-event SAR input and pre-event optical plus post-event SAR inputs for damage classification task. Here, accurate building masks are provided as the post-processing step to all models to isolate the effect of building localization task on the damage classification task.

4.3 The role of optical pre-event data in multimodal building damage assessment

In the last section, CAM visualizations revealed that DL models also exhibit responses to disaster-specific patterns in pre-event optical imagery. This observation suggests that optical data may play a more complex role in multimodal building damage mapping than simply supporting building localization. In other words, in a multimodal bi-temporal setup, does pre-event optical imagery act solely as a localization aid, or does it provide additional semantic cues that networks can exploit for more accurate damage classification?

To explore this, we conducted controlled experiments using UNet and DeepLabV3+. Both networks were trained under two configurations: (i) using post-event SAR imagery only, and (ii) using multimodal pre- and post-event inputs (optical-SAR). To isolate the contribution of pre-event optical data beyond building localization, we provided perfect building masks for postprocessing in both settings. This design ensures that any observed differences in performance are attributable to the additional information from pre-event optical imagery, rather than differences in network architecture or localization accuracy.

The results, summarized in Table 7, show that incorporating pre-event optical imagery leads to notable improvements in distinguishing building damage levels. For UNet, the IoU for the “Damaged” class increased from 35.83 % (SAR only) to 44.83 % (Optical-SAR), and for the “Destroyed” class from 55.35 % to 55.42 %. DeepLabV3+ exhibited significant gains also, with IoU improvements from 39.63 % to 40.45 % for “Damaged” category, and from 59.54 % to 64.94 % for “Destroyed” category. These results suggest that pre-event optical imagery contributes beyond mere building localization, enriching the feature space for more effective semantic comparison for different building damage levels across modalities.

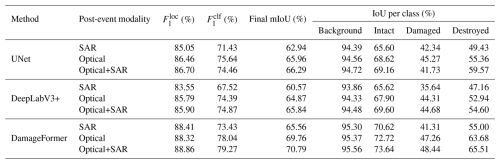

4.4 Impact of post-event modality on building damage assessment performance

Although the primary design of Bright is to facilitate all-weather disaster response through the use of pre-event optical and post-event SAR imagery, it is also important to understand how these modalities compare when high-quality post-event optical imagery is available. To this end, we conducted supplementary experiments on a subset of events, including Bata-Explosion-2020, Beirut-Explosion-2021, Hawaii-Wildfire-2023, Libya-Flood-2023, and Noto-Earthquake-2024, for which pre-processed post-event optical data were accessible. We evaluated three experimental setups: (i) optical-only (pre-event optical + post-event optical), (ii) SAR-only (pre-event optical + post-event SAR, i.e., the standard Bright setting), and (iii) optical + SAR fusion (pre-event optical + post-event optical + post-event SAR).

Table 8Performance comparison of different post-event modalities on a subset of Bright. Results are reported for UNet, DeepLabV3+, and DamageFormer on five disaster events where high-quality post-event optical imagery is available: Bata-Explosion-2020, Beirut-Explosion-2021, Hawaii-Wildfire-2023, Libya-Flood-2023, and Noto-Earthquake-2024.

Table 8 presents the experimental results. As expected, when ideal post-event optical imagery is available, the optical-only setup achieves higher performance than the SAR-only setup. For example, with DamageFormer, the optical-only configuration reaches a final mIoU of 69.76 %, compared to 65.56 % for SAR-only. Importantly, the performance gap between optical and SAR is not substantial, demonstrating that SAR alone provides a strong alternative in the absence of usable optical imagery. Moreover, the fusion of optical and SAR consistently yields the best results across all tested models. For instance, DamageFormer's mIoU further increases to 70.79 % with Optical+SAR fusion, indicating that SAR contributes complementary information that strengthens performance even under optimal optical conditions.

These findings underscore two important insights. First, multimodal fusion is beneficial even when high-quality optical data are available, as SAR provides unique structural information that enriches the optical signal. Second, the performance of the SAR-only approach, being reasonably close to the optical-only results, highlights the practical value of SAR in real-world disaster scenarios where post-event optical imagery is often unavailable. Bright is therefore designed to advance the development of models for these realistic, often non-ideal, but operationally critical all-weather disaster response settings.

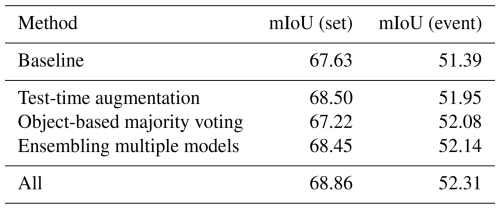

4.5 Effect of post-processing method

Post-processing techniques help refine raw predictions from DL models, to reduce noise, improve consistency, and ensure spatial coherence in damage maps (Zheng et al., 2021). Here, we explore the impact of post-processing algorithms. Table 9 presents the effect of three post-processing techniques applied to ChangeMamba. The post-processing methods evaluated include test-time augmentation, object-based majority voting, and model ensembling. The details of the methods are provided in Appendix E. As shown in Table 9, the test-time augmentation improves the mIoU by 0.87 % at the set level and 0.56 % at the event level, demonstrating its effectiveness in enhancing model robustness across diverse disaster scenarios. Object-based majority voting, which aggregates predictions at the building-object level to enforce spatial consistency, slightly reduces set-level mIoU (−0.41 %) but improves event-level mIoU (+0.69 %). Ensembling multiple models leads to a 0.82 % increase in mIoU at the set level and a 0.75 % increase at the event level, reinforcing its effectiveness in improving model performance across different disaster events. Applying all post-processing techniques together yielded the highest performance improvement, with a 1.23 % increase in set-level mIoU and a 0.92 % increase in event-level mIoU. These results confirm that combining different post-processing methods can significantly enhance the reliability of AI-based damage assessments, ensuring better generalization across disaster types and locations. In summary, post-processing techniques are crucial in improving the accuracy of building damage maps. Future work can further explore adaptive post-processing strategies tailored to specific disaster types to enhance prediction reliability in multimodal EO data contexts.

Table 9Further contributions to mIoU from post-processing algorithms. ChangeMamba (Chen et al., 2024) is used here as the baseline. Details on these algorithms are provided in Appendix E.

4.6 Evaluation on cross-event transfer setup

4.6.1 Baseline methods

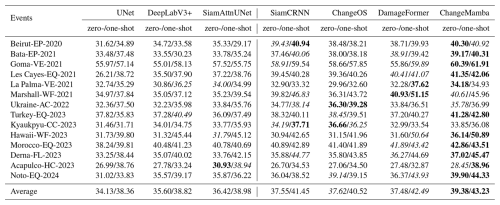

Cross-event transfer, especially under zero-shot settings, poses a significant challenge for building damage assessment. As shown in Table 10, the average mIoU of all baseline models in the zero-shot setting is below 40 %, a noticeable drop compared to their performance under the fully supervised standard ML data split in Table 6, where the models typically achieve 48 % to 52 % mIoU. This performance gap underscores the difficulty of generalizing to unseen disaster events without access to any target domain supervision due to substantial domain shifts in imaging conditions, damage patterns, urban morphology, and sensor response. Despite this, all models exhibit clear performance gains in the one-shot setting, where a small number of labeled samples from the target event are available. This suggests that even minimal supervision can significantly aid adaptation to new disaster contexts.

Among the evaluated models, ChangeMamba consistently achieves the highest overall performance, with an average mIoU of 39.38 % in zero-shot and 43.23 % in one-shot settings, followed by DamageFormer. This highlights the strength of recent Transformer- and Mamba-based architectures in transferring learned knowledge under complex multimodal and disaster scenarios. Models using a decoupled architecture generally outperform direct segmentation models, which confirms that separating building localization and damage classification improves generalization in cross-event transfer tasks. In contrast, the Acapulco-HC-2023 and Ukraine-AC-2022 events show weaker results across all models, reflecting the difficulty in transferring to domains with limited or inconsistent destruction patterns.

4.6.2 Unsupervised domain adaptation and semi-supervised learning methods

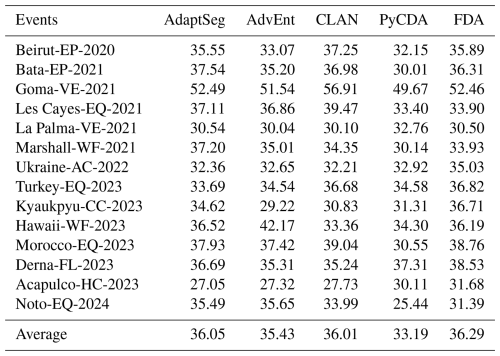

In the context of cross-event transfer, the UDA and SSL methods naturally emerge as promising strategies to bridge the domain gap between the source and the target disaster events. In the zero-shot setting, UDA methods aim to improve model generalization by aligning the source and target domains without requiring any target labels. In the one-shot setting, SSL methods leverage a small number of labeled samples with abundant unlabeled data from the target event, making them especially appealing for real-world disaster scenarios where rapid and comprehensive annotation is infeasible. It remains an open question whether these UDA and SSL methods, originally developed for natural image domains, can effectively handle the challenges of multimodal EO data in complex disaster scenarios. We evaluated several representative UDA and SSL methods using DeepLabV3+ as the baseline model to examine their capabilities in complex disaster scenarios. The results for UDA and SSL are reported in Tables 11 and 12, respectively.

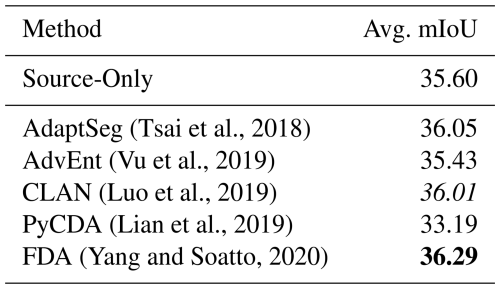

(Tsai et al., 2018)(Vu et al., 2019)(Luo et al., 2019)(Lian et al., 2019)(Yang and Soatto, 2020)Table 11Results of unsupervised domain adaptation methods adopted for zero-shot cross-event transfer setup. DeepLabV3+ (Chen et al., 2018) is used as the baseline here. Detailed mIoU on each event is listed in Table F1. The highest values are highlighted in bold, and the second-highest results are highlighted in italicized.

Table 11 presents the performance of five classical UDA methods. Compared to the source-only baseline (35.60 % mIoU), most methods achieve modest improvements, with FDA (+0.69 %) and AdaptSeg (+0.45 %) showing the most consistent gains. CLAN also performs slightly better (+0.41 %), suggesting that category-level alignment contributes positively even under a large domain shift. In contrast, AdvEnt shows negligible change, and PyCDA significantly underperforms, dropping 2.41 % mIoU below the baseline. This performance degradation indicates that approaches relying on pseudo-label refinement or curriculum learning may struggle in multimodal imagery under disaster scenarios, where spatial layout and damage semantics vary drastically across events. Overall, while UDA methods show some promise, their improvements are relatively minor and not robust across all events, underscoring the difficulty of domain alignment in EO-based damage mapping.

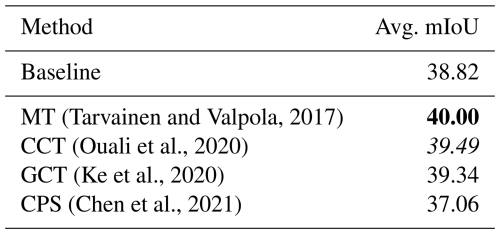

In the one-shot setting, we evaluated several popular SSL methods, as shown in Table 12. With only minimal supervision in the target event, all methods except CPS improved over the one-shot baseline (38.82 %). The best-performing method is Mean Teacher (MT), which yields a gain of +1.18 %, followed by CCT (+0.67 %) and GCT (+0.52 %). These results show that simple consistency-based teacher-student frameworks are particularly effective in leveraging unlabeled data under limited supervision, likely due to their robustness to noisy or class-imbalanced targets.

(Tarvainen and Valpola, 2017)(Ouali et al., 2020)(Ke et al., 2020)(Chen et al., 2021)Table 12Results of semi-supervised learning methods adopted for one-shot cross-event transfer setup. DeepLabV3+ (Chen et al., 2018) is used as the baseline here. Detailed mIoU on each event is listed in Table G1. The highest values are highlighted in bold, and the second-highest results are highlighted in italicized.

4.6.3 Why is cross-event transfer challenging?

To better understand why cross-event generalization is difficult, we explore two fundamental factors rooted in the nature of real-world disaster response.

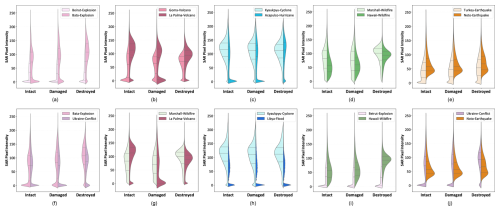

-

Inconsistent damage signatures across events. Figure 10 presents violin plots of SAR backscatter values for intact, damaged, and destroyed buildings across multiple event pairs. These plots reveal two key observations. First, even within the same disaster category (the first row), the pixel intensity distributions for damaged and destroyed buildings differ significantly between events. This indicates that SAR-based damage signatures are inconsistent across locations, possibly due to differences in urban layout, building materials, or sensor incidence angles. Secondly, the distribution shift becomes more pronounced across different types of disasters (the second row). For example, the signatures of destroyed buildings in wildfires notably differ from those in volcano eruptions, which also differ from floods or hurricanes. These variations reflect fundamental differences in damage mechanisms: buildings burned in wildfires, submerged in floods, or collapsed in earthquakes leave very different patterns in SAR backscatter. Such distributional discrepancies make it extremely difficult for models to generalize from one event to another. A model trained on one disaster might learn class boundaries (e.g., between “Damaged” and “Destroyed”) that do not transfer well to another disaster, especially when the visual and physical properties of damage are fundamentally different.

-

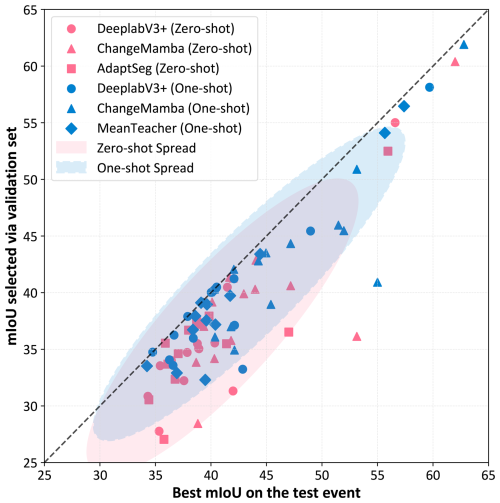

Lack of target domain supervision for model selection. Another critical challenge in cross-event setup is the absence of labeled target domain samples for model selection. In typical domain adaptation benchmarks in computer vision, a validation set of target domain is available to tune hyperparameters or select the best checkpoint. However, no such validation data can be assumed in disaster response scenarios. Figure 11 illustrates the resulting issue: the gap between the actual best mIoU on the test event and the mIoU of the checkpoint selected using a source domain validation set for several representative models under both zero-shot and one-shot settings. In the zero-shot setting, this performance gap is substantial across most models. This indicates that relying on source domain validation leads to suboptimal model selection due to poor reflection of the target distribution. The one-shot setting helps reduce this gap by enabling limited target-aware selection. However, the issue is not entirely resolved. Even with a few labeled samples, model instability and domain shift still make selection challenging.

Together, these two findings highlight that cross-event transfer is hindered not only by semantic and statistical shifts across disaster types but also by operational constraints that prevent ideal training and tuning. Future studies should consider both aspects, i.e., developing models that are robust to distributional variance and designing selection strategies that do not depend on target supervision, such as self-validation, early stopping heuristics, or domain-agnostic performance proxies (Yang et al., 2023).

Figure 10The comparison of pixel distribution of different categories in SAR images for different events. The first row compares different events with the same disaster type. (a) Explosion. (b) Volcano eruption. (c) Wildfire. (d) Cyclone/Hurricane (e) Earthquake. The second row compares different disaster types. (f) Explosion vs Conflict. (g) Wildfire vs Volcano Eruption. (h) Cyclone vs Flood. (i) Explosion vs Wildfire. (j) Conflict vs Earthquake.

Figure 11Comparison of models' best performance (mIoU) on test events versus the best checkpoints selected on validation sets under the cross-event transfer setting. Each point represents the performance on a single test event under cross-event transfer. The farther a point lies from the diagonal line, the larger the gap between the model's selected performance and its true upper bound.

4.7 Evaluation of unsupervised multimodal change detection methods

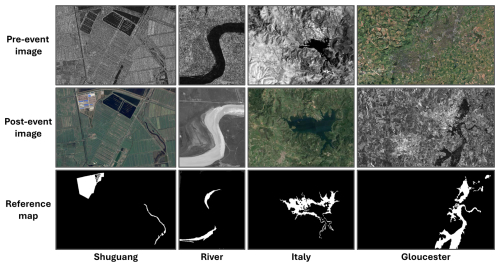

Unsupervised multimodal change detection (UMCD) plays a crucial role in post-disaster assessment by enabling rapid analysis of affected areas without requiring labeled data or prior model training (Lv et al., 2022). Current studies are mainly conducted on toy datasets with limited geographic diversity and scene complexity. These datasets are for general land cover changes. Whether these methods work in real-world disaster occurrence/building damage scenarios is still unknown. Bright offers a new opportunity to evaluate UMCD methods to provide insights into their robustness and scalability in disaster response scenarios. Six representative UMCD methods (Sun et al., 2021; Chen et al., 2022b, 2023; Han et al., 2024; Sun et al., 2024a; Liu et al., 2025) are evaluated in this work. The experimental setup is described in Appendix H.

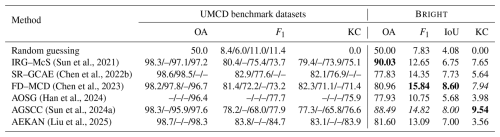

(Sun et al., 2021)(Chen et al., 2022b)(Chen et al., 2023)(Han et al., 2024)(Sun et al., 2024a)(Liu et al., 2025)Table 13Results of representative unsupervised multimodal change detection methods. KC is the acronym of kappa coefficient. The highest values are highlighted in bold, and the second-highest results are highlighted in italicized. The accuracies on the UMCD benchmark dataset are the accuracies on the four datasets presented in Fig. H1, obtained from their literature. Details of methods and benchmark datasets are presented in Appendix H. The random guessing baseline is included to indicate the performance floor under the UMCD setup. The “–” symbol indicates that the corresponding method did not report results on that dataset in their original publications.

Table 13 presents the performance of UMCD methods on Bright and UMCD benchmark datasets. To provide a baseline for reference, we also include a random guessing result, representing the performance floor under the UMCD setup. The UMCD benchmark datasets1 are detailed in Fig. H1 in Appendix H. Although these methods achieved considerable performance on existing UMCD benchmark datasets, their performance suffers noticeable declines on Bright. For instance, while they achieved F1 scores between 70 %–85 % on existing benchmarks, their F1 scores dropped to 20 % on Bright. This dramatic performance gap underscores the limitations of current UMCD research and highlights the challenges posed by real disaster scenarios. We identify three primary reasons for this decline:

-

Limitations in traditional UMCD datasets. The existing UMCD datasets consist of only a handful of image pairs, often depicting simple land cover changes, such as urban expansion and deforestation, with relatively low-resolution imagery and limited geographic diversity, as shown in Fig. H1 in Appendix H. These datasets fail to capture the complexity and variability found in real-world disaster scenarios. In contrast, Bright provides thousands of VHR multimodal image pairs across different types of disasters, significantly increasing the diversity of test cases and making it a more challenging benchmark for UMCD model evaluation.

-

Interference from non-disaster changes. Unlike previous UMCD benchmarks, where general land cover changes are the primary detection target, changes such as vegetation growth, water body shifts, and urban development may introduce noise and interfere with damage detection in real-world disaster scenarios. While prior UMCD studies treat such changes as valid detection targets, Bright requires methods to differentiate true building structural damage from irrelevant land cover variations, posing a unique challenge to current approaches.

-

Problematic evaluation protocol. Current UMCD research follows a problematic evaluation protocol where models are trained, hyperparameters are tuned, and then validated on the same dataset. This approach can actually lead to overfitting, artificially making the model look very accurate but not generalizable to real-world scenarios. In a real-world disaster scenario, there are no labeled samples available for hyperparameter tuning, which makes the current practice unrealistic. Bright exposes this limitation, as models now need to be trained or tuned before being tested directly on new, unseen data, requiring truly generalizable and adaptive learning strategies.

The results have highlighted the challenges of applying existing UMCD models to real-world disaster scenarios. Bright reveals significant limitations in current methodologies and presents new opportunities for future UMCD research.

4.8 Evaluation of unsupervised multimodal image matching methods

Precise image alignment is a critical prerequisite for any multimodal EO application. Bright offers a unique opportunity to evaluate the performance of existing UMIM algorithms under realistic, large-scale disaster conditions. Due to the lack of real pixel-level ground truth correspondences, we adopt a proxy evaluation strategy using manually selected control points (as shown in Fig. C1 in Appendix C) as references. These points were selected by EO experts to represent identifiable and stable features across modalities. While this does not constitute absolute ground truth, using such human-verified correspondences provides a valuable reference. This allows us to assess how closely automated methods approximate human matching ability under multimodal and disaster conditions.

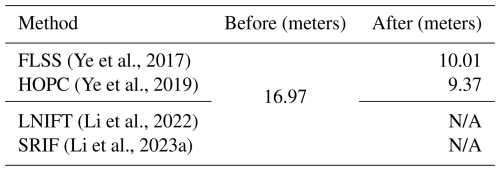

(Ye et al., 2017)(Ye et al., 2019)(Li et al., 2022)(Li et al., 2023a)Table 14Registration performance of different UMIM methods on the scene of Noto-Earthquake-2024. Note that the offsets here are in meters and are calculated based on the geo-coordinates of control points manually selected by EO experts. N/A means that the method is unable to complete the registration task.

Table 14 presents the quantitative results of four UMIM methods on the Noto-Earthquake-2024 scene. These methods fall into two main categories: feature-based methods (LNIFT (Li et al., 2022) and SRIF (Li et al., 2023a)), which rely on sparse keypoint detection and matching, and area-based methods (FLSS (Ye et al., 2017) and HOPC (Ye et al., 2019)), which operate on local regions to estimate correspondences. A detailed description of each method is provided in Appendix I. The experimental results reveal that traditional feature-based methods fail to achieve successful automatic registration. We attribute this limitation to the large spatial extent of the scenes and the drastic cross-modal differences, which make direct keypoint matching highly error-prone and unreliable.

In contrast, area-based methods, which rely on the similarity in a local region rather than point correspondence at the global level, can partially mitigate registration errors and achieve relatively more stable performance. However, these methods still face a critical limitation: they typically perform local matching within a fixed search window. This strategy is inherently inadequate for handling large displacements, especially in SAR imagery over mountainous or uneven terrain, where terrain-induced distortions can cause substantial pixel shifts that far exceed the local search range. Thus, automatic methods may serve as a useful auxiliary tool for preliminary matching or candidate generation, but human expertise remains indispensable for ensuring precise and reliable alignment in operational scenarios. We hope that Bright and its challenging real-world scenarios will inspire the development of new UMIM methods that are more robust to large-scale scene variations, sensitive to terrain-induced distortions, and ultimately capable of reducing human efforts in future operational EO-based disaster response workflows.

5.1 Limitation of Bright

We begin this subsection by acknowledging that the composition of the Bright dataset is fundamentally shaped by practical constraints in data availability. While Bright represents a significant step forward in assembling a large-scale, multimodal, and globally distributed dataset for disaster response, it is important to recognize several inherent limitations. These limitations arise not only from the scarcity of open-access VHR SAR imagery, especially over disaster-affected regions, but also from the challenges of manual annotation and the uneven distribution of events. To provide a clearer picture for potential users, we summarize these constraints in four aspects below.

-

Registration error. Bright dataset consists of optical and SAR images covering the same locations. SAR images, in particular, can be distorted and stretched in certain areas. Despite thorough preprocessing, including manual alignment and cross-checking by multiple experts in EO data processing, minor alignment errors may persist, as Table 4 suggests.

-

Label quality. The building polygons in Bright were manually annotated by expert annotators. Although manual labeling generally ensures high accuracy, minor errors in polygon boundaries are inevitable due to the complexity of building shapes and the variability in image resolution. These inaccuracies may slightly affect the performance of the models trained on Bright. Furthermore, experts assessed the extent of damage to buildings through visual interpretation of optical EO data. This process is susceptible to occasional misjudgments, contributing to label noise.

-

Sample and regional imbalance. Although Bright is rich in geographically diverse data, it has the problem of regional imbalance in the number of labels. Some of its events have more tiles and building numbers, thus, are more dominant in training and evaluation, e.g., Turkey-Earthquake-2023 (1114 tiles) v.s. Hawaii-Wildfire-2023 (65 tiles) in Table 2. To address this, we used an additional event-level evaluation method. However, the effect of events, which account for a large percentage of the sample, on the model during the training phase is still not negligible. This may affect the generalizability of the trained model in real-world disaster scenarios. In addition, all the disaster events in Bright are located near the equator or in the northern hemisphere, with no events from the southern hemisphere. This spatial bias could potentially limit the applicability of trained models to regions with different building styles, land cover patterns, or SAR imaging characteristics prevalent in the southern part of the globe.

-

Modality and temporal scope. The dataset's scope is defined by two key characteristics of the available data. First, it exclusively utilizes single-polarization SAR imagery. The current version lacks the more informative multi-polarization or dense time-series SAR data, which, if available, could enable more nuanced damage characterization and long-term recovery monitoring, respectively. Second, the dataset's temporal coverage is concentrated on events from 2020 onwards. This is a direct consequence of its reliance on modern commercial VHR SAR providers (Capella Space and Umbra), whose open-data initiatives largely commenced around that time.

Overall, despite these limitations, it is the first time such an open multimodal VHR dataset has been constructed for multimodal EO research with a large-scale and diverse disaster context.

5.2 Significance of Bright

Delays in both EO data acquisition and damage interpretation workflows often hinder timely disaster response (Ye et al., 2024). Traditional expert-driven building damage mapping is time-consuming and not scalable. While ML and DL methods offer automated alternatives, their effectiveness remains limited by the scope and quality of available training data. Most existing open-source datasets are optical, restricting the models' operational applicability in adverse weather and low-light conditions. As the first globally distributed multimodal dataset, Bright encompasses pre-event optical images and post-event SAR images. This unique combination overcomes the limitations of optical EO data by enabling models trained on Bright to monitor disaster-stricken areas regardless of weather conditions or daylight. Compared to existing building damage datasets, Bright offers several distinct characteristics: multimodal data, VHR imagery with sub-meter spatial resolution, coverage of five types of natural disasters and two human-made disasters, rich geographic diversity, and open access to the community. Due to these features, Bright is anticipated to serve as a benchmark for many future studies and practical disaster relief applications.

Beyond building damage assessment, Bright can also support several research directions within the EO and vision community. In this work, we have applied Bright to evaluate the performance of several UDA and SSL methods for cross-event transfer. We also demonstrated its applicability to UMCD and UMIM, showcasing its versatility as a benchmark for multiple EO challenges under real-world constraints. Furthermore, the dataset provides a strong foundation for broader multimodal EO research. Its high-quality annotations and geographic diversity directly apply to tasks, such as building footprint extraction, land cover mapping, height estimation, and EO-based visual question answering (VQA). Researchers can also repurpose or extend Bright to create task-specific benchmarks, enabling flexible experimentation across tasks. v is also well-positioned to support the development of EO-based foundation models, large-scale pre-trained models designed to generalize across sensors, tasks, and regions (Wang et al., 2023; Hong et al., 2024). Its rich combination of modalities, spatial detail, and contextual diversity provides the data diversity required to build such general-purpose foundation models. This contribution is significant as the field moves toward creating versatile, scalable AI models that can be applied across different types of EO data and disaster scenarios (Li et al., 2024).

Looking ahead, Bright can be further enhanced by incorporating additional modalities. For example, the inclusion of fully polarimetric SAR data would enable more nuanced damage classification than current single polarization SAR data by characterizing the different scattering properties of building materials and debris. Meanwhile, LiDAR data would offer precise 3D information to directly quantify structural collapse and enable a truly terrain-aware analysis. Future versions may also help fill current geographic gaps, including southern hemisphere regions, to ensure more globally representative coverage. Ultimately, we envision that Bright, true to its name, will bring even a glimmer of brightness to people in disaster-stricken areas by enabling more prompt and effective disaster response and relief.

The Bright dataset is available at https://doi.org/10.5281/zenodo.14619797 (Chen et al., 2025a). The code for training and testing benchmark methods (including code related to IEEE GRSS DFC 2025) is accessible at https://doi.org/10.5281/zenodo.17569363 (Chen et al, 2025c). Models' checkpoints can be downloaded at https://doi.org/10.5281/zenodo.15349461 (Chen et al., 2025b).

In this paper, we introduced Bright, the first globally distributed multimodal dataset with open access to the community, covering 14 natural and human-made disaster events. Bright includes pre-event optical and post-event SAR images with sub-meter spatial resolution. Beyond introducing the dataset, we conducted a comprehensive series of experiments to validate its utility. We benchmarked several state-of-the-art supervised learning models under a standard machine learning data split. Moreover, we extended the evaluation to a cross-event transfer setting, simulating real-world scenarios where no or limited target annotations are available. Furthermore, we assessed the performance of unsupervised domain adaptation, semi-supervised learning methods, unsupervised multimodal change detection, and image matching techniques. The findings serve as performance baselines and provide valuable insights for future research in DL model design for real-world disaster response. Bright is an ongoing project, and we remain committed to continuously enhancing its diversity and quality by incorporating new disaster events and refining the existing data. Our objective is to improve Bright's utility for practical disaster response applications at all levels (regional, national, and international) and research in the community.

A1 Explosion in Beirut, 2020