the Creative Commons Attribution 4.0 License.

A new satellite-derived dataset for marine aquaculture areas in China's coastal region

Yongyong Fu

Jinsong Deng

Hongquan Wang

Alexis Comber

Wu Yang

Wenqiang Wu

Shixue You

Yi Lin

Ke Wang

China has witnessed extensive development of the marine aquaculture industry over recent years. However, such rapid and disordered expansion posed risks to the coastal environment, economic development, and biodiversity protection. This study aimed to produce an accurate national-scale marine aquaculture map at a spatial resolution of 16 m by applying a proposed model based on deep convolutional neural networks (CNNs) to satellite data from China's GF-1 sensor in an end-to-end way. The analyses used homogeneous CNNs to extract high-dimensional features from the input imagery and preserve information at full resolution. Then, a hierarchical cascade architecture was followed to capture multi-scale features and contextual information. This hierarchical cascade homogeneous neural network (HCHNet) was found to achieve better classification performance than current state-of-the-art models (FCN-32s, Deeplab V2, U-Net, and HCNet). The resulting marine aquaculture area map has an overall classification accuracy > 95 % (95.2 %–96.4 %, 95 % confidence interval). Marine aquaculture was found to cover a total area of ∼ 1100 km² (1096.8–1110.6 km², 95 % confidence interval) in China, of which more than 85 % is marine plant culture areas, with 87 % found in the Fujian, Shandong, Liaoning, and Jiangsu provinces. The results confirm the applicability and effectiveness of HCHNet when applied to GF-1 data, identifying notable spatial distributions of different marine aquaculture areas and supporting the sustainable management and ecological assessments of coastal resources at a national scale. The national-scale marine aquaculture map at 16 m spatial resolution is published in the Google Maps kmz file format with georeferencing information at https://doi.org/10.5281/zenodo.3881612 (Fu et al., 2020).

Marine aquaculture, which refers to the breeding, rearing, and harvesting of aquatic plants or animals in marine waters, has significant potential for food production, economic development, and environmental protection in coastal areas (Burbridge et al., 2001; Campbell and Pauly, 2013; Gentry et al., 2017). It has become a fast-growing industry in China due to the significant increase in the demand for seafood, support from policies, and technology innovation (Liang et al., 2018). The marine aquaculture production in China has increased from 10.6 million metric tons in 2000 (Bureau of Fisheries of the Ministry of Agriculture, 2001) to 20.7 million metric tons in 2019 (Bureau of Fisheries of the Ministry of Agriculture, 2020). However, such rapid and disordered growth may cause severe economic losses and environmental problems, such as water pollution (Tovar et al., 2000), biodiversity decrease (Galil, 2009; Rigos and Katharios, 2010), and marine sediment pollution (Porrello et al., 2005; Rubio-Portillo et al., 2019). Therefore, accurate mapping and monitoring of marine aquaculture can provide evidence to support the sustainable management of coastal marine resources.

Previous research in this domain can be grouped into visual interpretation, analyses enhanced by including ancillary data such as information about spatial structure, object-based image analysis (OBIA), and deep-learning-based methods. Visual interpretation is used less frequently as it requires too much time and effort. Enhanced analyses that incorporate features such as texture or average filtering (Fan et al., 2015; Lu et al., 2015; Xiao et al., 2013) are commonly employed for pixel-based approaches. However, these are subject to noise (the salt-and-pepper effect) and decreased accuracy (Zheng et al., 2017). OBIA has been widely used for the detailed interpretation of marine aquaculture from remote sensing images (Fu et al., 2019a; Wang et al., 2017; Zheng et al., 2017). It first partitions the image into segments and then classifies segments based on their internal properties (Blaschke et al., 2014). However, since almost all these methods are proposed based on the handcrafted features, it is inherently difficult for them to achieve balance between high discriminability and good robustness (L. Zhang et al., 2016). To solve such problems, the remote sensing community has started to incorporate deep fully convolutional neural networks (FCN) within marine aquaculture detection tasks using high-spatial-resolution (HSR) images at local scales (Cui et al., 2019; Fu et al., 2019b; Shi et al., 2018). However, the opportunities associated with analyses of the high volumes of publicly available and free remote sensing data at medium-resolution, such as Landsat, Sentinel-2 A/B, and GaoFen-1 wide-field-of-view (GF-1 WFV) imagery, have not been exploited. Therefore, it is necessary to develop a detection system applying deep FCNs to such data to provide more reliable and effective mapping and monitoring over wider areas, supporting evaluations of marine aquaculture areas at a national scale.

However, there are several critical limitations for accurate mapping of marine aquaculture areas using deep FCN-based methods when applied to medium-resolution data. The first is the coexistence of multi-scale objects, such as the large sea areas as well as small aquaculture areas, making it difficult to focus FCN on small marine aquaculture objects. A common approach is to use inputs of different sizes from the original images (Eigen and Fergus, 2015; Liu et al., 2016; Zhao and Du, 2016) to ensure that different object sizes are prominent in different parts of the FCN structure, but such methods take more time due to the repetitive sampling of the input imagery. Some researchers have generated multi-scale features using atrous convolution (Chen et al., 2018) or pooling operations at different scales (He et al., 2015; Zhao et al., 2017). However, such approaches may be limited to a certain range of receptive fields, as operations may be applied to invalid zones when pooling with a larger pooling size or atrous convolution with a higher atrous rate. The second critical limitation is that the final features may have a smaller size than the input imagery due to consecutive pooling operations in FCN, making it hard to identify land cover details. To solve this problem, researchers have used deconvolution operation (Noh et al., 2015) or fused features (Pinheiro et al., 2016; Ronneberger et al., 2015), but FCN may fail to identify relatively small marine aquaculture areas. Finally, some researchers have tried to refine the classification approach by including known boundaries (Bertasius et al., 2016; Fu et al., 2019c; Marmanis et al., 2018), but such methods require additional classification steps to perform boundary extraction.

In conclusion, although present methods have been successfully applied for dense classifications, the challenge of using them to accurately extract the marine aquaculture areas from medium resolution images at a national scale remains. To overcome these limitations, we proposed a novel framework for the large-scale marine aquaculture mapping. The main contributions of our study can be summarized as follows.

- We present a unified framework based on convolution neural networks (CNNs) for national-scale marine aquaculture extraction.

- A hierarchical cascade homogeneous neural network (HCHNet) model is proposed to learn discriminative and robust features.

- We provide the first detailed national-scale marine aquaculture map with a spatial resolution of 16 m.

The rest of the paper proceeds as follows. Section 2 briefly presents a description of the study area and different types of marine aquaculture. Section 3 introduces the input data and method to develop the proposed deep learning architectures, implementation details, and methodological choices. The results are presented in Sect. 4. Section 5 then discusses the methods and the limitations of using deep learning methods with medium-resolution data, and finally, Sect. 6 concludes the paper.

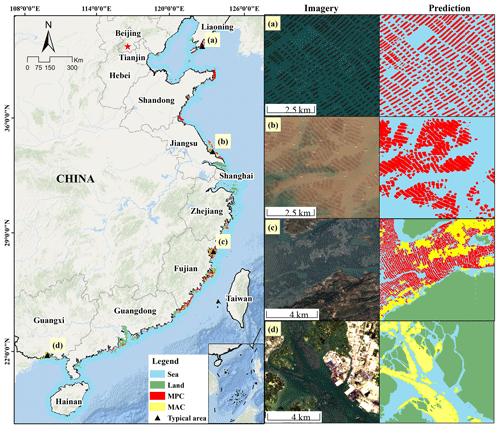

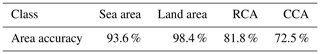

The study area included all of the potential marine aquaculture areas in China's coastal regions (Fig. 1). Owing to the long coastline and its associated resources, marine aquaculture has developed rapidly in many coastal regions. After a visual inspection of the HSR images from Google Earth, we empirically set the width of the study area along the coastline to 30 km. According to the types of cultivated aquatic products, the marine aquaculture areas in China can be classified into marine animal culture (MAC) areas and marine plant culture (MPC) areas.

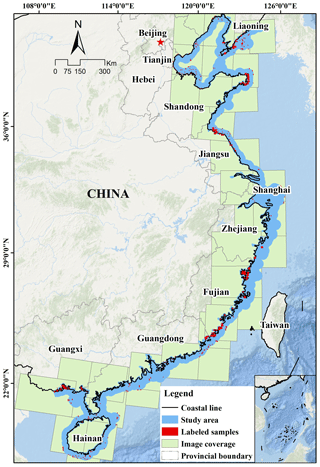

MAC areas are cultured with marine animals, such as fish, crustaceans, shellfish, etc., in connected cages (Fig. 2k), or wooden rafts (Fig. 2d). Most of the cages and rafts are small (normally 3 m × 3 m or 5 m × 5 m in size) and simple in form (normally square). The materials used to construct these cages are collected locally and include bamboo, wooden boards, plastic foam floats, and polyvinyl chloride or nylon nets. Because of the low investment costs and ease of construction, farmers typically make the cages themselves. As they cannot withstand waves generated by typhoons or sea currents, most cages must be installed in inshore waters and sheltered sites (Fig. 2c, i).

MPC areas are generally cultivated with seaweed, such as kelp, Undaria, Gracilaria, etc. Most of the seaweed is twisted around ropes about 2 m in length. The ropes are linked or tied to one (Fig. 2f) or two floating lines (Fig. 2j), which are about 60 m long and kept at the sea surface by buoys made from foam or plastic and anchored by lines tied to wooden pegs driven into the sea bottom. As most of the MPC areas are submerged in the sea water, their appearance in remotely sensed images is usually influenced by different environments (Fig. 2b, e, g, h, j), making classification difficult.

Figure 1. Location of the study area, the spatial distribution of labeled samples, and acquired GF-1 wide-field-of-view (WFV) image swaths in our study.

Figure 2. Location of the sampling points (a). Image examples of typical marine aquaculture areas on ground or from high-spatial-resolution (HSR) images. (b, e, g, h, i) Marine plant culture (MPC) areas from HSR images. (c, i) Marine animal culture (MAC) areas from HSR images. (d, k) Photos of MAC areas on ground. (f, j) Photos of MPC areas on ground.

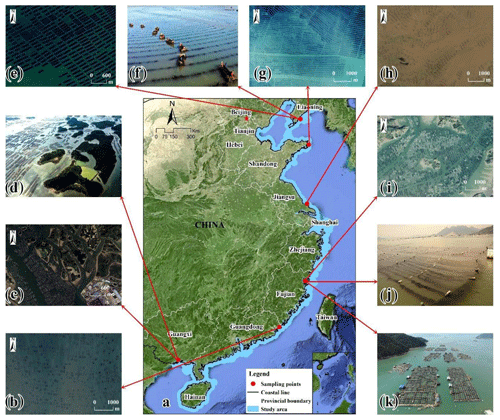

Due to the large number of factors that could potentially affect classification performance, implementing the FCN-based method at the national scale is a challenge. To reduce the influence of various land covers, we used the coastline vector (Chuang et al., 2019) to exclude mainland areas after preprocessing all the input images (Fig. 3); then, we produced the marine aquaculture map by utilizing the HCHNet method, which was trained and tested on a dataset validated by field survey or HSR images.

Figure 3. Schematic flow chart of the marine aquaculture mapping (ROI: region of interest; HCHNet: hierarchical cascade homogeneous neural network).

3.1 Data and preprocessing

In this study, images from the WFV sensors of GF-1 were selected as the primary data source. The GF-1 satellite, which is the first satellite of the China high-resolution earth observation satellite program, was launched by the China Aerospace Science and Technology Corporation in April 2013. This satellite carries four integrated WFV sensors, providing multi-spectrum data with a 2 d revisit cycle and a swath width of 800 km when the four sensors are combined. Each WFV sensor has four multi-spectral bands at 16 m spatial resolution: B1 (450–520 nm, blue), B2 (520–590 nm, green), B3 (630–690 nm, red), and B4 (770–890 nm, near infrared). Compared with other frequently used medium-resolution satellite imagery (e.g., Landsat, Sentinel), the wide coverage ability, high-frequency revisit time, and 16 m spatial resolution of the data significantly improve the capabilities for large-scale marine aquaculture area observation and monitoring. A total of 35 quantified GF-1 WFV images spanning the 2016–2019 period were finally selected from the China Centre for Resources Satellite Data and Application to cover the whole coastal region in China and to filter for cloud coverage (Fig. 1). The product filenames are listed in Table S1 of the Supplement.

The images were projected into the UTM map projection, and atmospheric correction was undertaken using the FLAASH atmospheric correction model embedded in the ENVI software (v5.3.1). The aerosol model was set to “Maritime”, and all the other parameters can be set automatically by using the extension tools (https://github.com/yyong-fu/ENVI_FLAASH_EasyToUse, last access: 27 April 2021). A 30 km buffer was used to extract the images of the coastal areas, and the final set of clipped images, consisting of four image bands at a spatial resolution of 16 m, was used as input in the following steps.

3.2 Hierarchical cascade homogeneous neural network

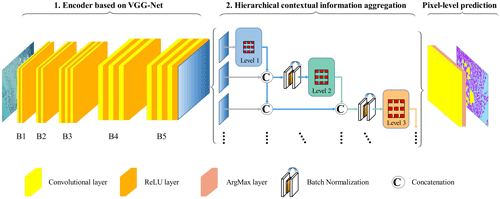

As shown in Fig. 4, the proposed HCHNet is an FCN-based neural network, which can be trained and applied to a large area in an end-to-end way. Specifically, a homogeneous CNN was designed to extract high-dimensional features from the input images. A hierarchical cascade structure was followed to extract multi-scale contextual information gradually based on high-dimensional features. The following subsections introduce three important components of the proposed HCHNet method, including (1) an encoder based on a homogeneous CNN, (2) hierarchical cascade structure, and (3) a loss function.

3.2.1 Encoder based on a homogeneous CNN

Traditional CNNs use a down-sampling process to improve local invariance, and their predictions are usually only labels at the patch level. For semantic segmentation, an FCN can enlarge the down-sampled feature maps to full-sized outputs by using interpolation (Badrinarayanan et al., 2017) or deconvolution (Noh et al., 2015). However, foreground objects, such as the marine aquaculture areas in our study, occupy a smaller portion of the GF-1 WFV images than foreground objects do in natural images, making it hard for the FCN to learn to recover the details lost through consecutive pooling operations.

As representations with high resolution are important for the preservation of detailed information, the homogeneous CNN (Shi et al., 2018) was used as the encoder. One of the advantages of the homogeneous CNN is that it retains the full resolution of features by removing all of the pooling operations. As shown in Fig. 4, we built the encoder based on the widely used VGG16 model. The VGG16 consists of 13 convolutional layers followed by three fully connected layers. To preserve the spatial information and control the model size, the fully connected layers were removed and the number of convolutional kernels in the corresponding layers was reduced. As a result, the encoder preserves features at the full resolution of the input image.
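To illustrate why removing pooling matters, the following Python sketch compares the spatial size of the final feature maps for a 256×256 input patch with and without pooling. It assumes stride-2 pooling and size-preserving convolutions; the function name `feature_map_size` is hypothetical and is not taken from the HCHNet code.

```python
def feature_map_size(input_size, num_pool_layers, pool_stride=2):
    """Spatial size after a stack of pooling layers.

    Assumes the convolutions are padded so that only pooling changes the size.
    """
    size = input_size
    for _ in range(num_pool_layers):
        size //= pool_stride
    return size


# The standard VGG16 has five 2x2 max-pooling layers;
# the homogeneous encoder described above has none.
vgg16_size = feature_map_size(256, num_pool_layers=5)
homogeneous_size = feature_map_size(256, num_pool_layers=0)

print(vgg16_size, homogeneous_size)
```

Under these assumptions a 256×256 patch is reduced to 8×8 after five pooling layers, so one cell of the final feature map covers 32×32 input pixels, which is larger than many of the small aquaculture objects discussed in this study.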

3.2.2 Hierarchical cascade structure

Although removing pooling operations can preserve more detailed information, it can also decrease the receptive field of the underlying neural network (Liu et al., 2018). With fixed and limited receptive fields, the loss of multi-scale contextual information may cause more misclassifications. To solve this problem, the hierarchical cascade structure proposed in a previous study (Fu et al., 2019b) was used. This structure generally enlarges the receptive field and increases the sampling rate by cascading atrous convolution layers hierarchically (as shown in the central part of Fig. 4). To reduce memory usage, batch normalization operations were used to replace the attention modules, allowing feature maps from different levels to be concatenated and easing the training process (Ioffe and Szegedy, 2015). The formulation of each atrous convolution layer in the proposed structure uses the following notation.

Fo denotes the feature maps output by our encoder network. The atrous convolution operation has a kernel size of K×K and a dilation rate of d at level l. Fl(⋅) denotes the features at level l in the structure, “(C)” denotes the concatenation operation, “L(⋅)” denotes batch normalization, and Dl denotes the dilation rate at level l.

3.2.3 Loss function

A significant problem during the training of FCN is the imbalance of classes. Such imbalances can make training inefficient, with relatively small marine aquaculture areas contributing little to the model training process. In contrast, much of the coastal area contains negative samples, such as the sea area, which may dominate the training process and decrease marine aquaculture identification accuracy. To address this class imbalance problem, Eigen and Fergus (2015) proposed reweighting each class in the loss calculation. Following this idea, a weighted loss was used to deal with the class imbalance problem and to allow effective training on all examples. The loss is defined in terms of the ground truth class, the predictive probability from the model for each class, and the weight αi for each class.
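The weighting idea can be sketched in a few lines of Python. The sketch assumes the standard class-weighted cross-entropy form, −Σi αi yi log(pi), with yi the one-hot ground truth and pi the predicted probability. The symbols yi and pi, the class order, and the weight values are illustrative assumptions, not the values used to train HCHNet.

```python
import math


def weighted_cross_entropy(y_true, p_pred, alpha, eps=1e-12):
    """Class-weighted cross-entropy for a single pixel.

    y_true: one-hot ground truth, p_pred: predicted class probabilities,
    alpha: one weight per class (larger values emphasize rare classes).
    """
    return -sum(a * y * math.log(max(p, eps))
                for a, y, p in zip(alpha, y_true, p_pred))


# Hypothetical class order (land, sea, MPC, MAC) and hypothetical weights.
alpha = [1.0, 1.0, 5.0, 10.0]

# A sea pixel and a MAC pixel, each predicted with probability 0.6
# for the correct class.
sea_pixel = weighted_cross_entropy([0, 1, 0, 0], [0.1, 0.6, 0.2, 0.1], alpha)
mac_pixel = weighted_cross_entropy([0, 0, 0, 1], [0.1, 0.2, 0.1, 0.6], alpha)

print(round(sea_pixel, 3), round(mac_pixel, 3))
```

With these example weights, an equally confident prediction on a MAC pixel contributes ten times as much to the loss as one on a sea pixel, which is how the weighting keeps the abundant sea pixels from dominating training.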

3.3 Implementation details

As shown in Fig. 4, the encoder, a homogeneous CNN with 13 layers (Table 1), was applied first to produce high-dimensional and abstract features at full resolution from the input imagery. Multi-scale contextual information was then captured by using the hierarchical cascade structure with atrous rates of 3, 6, and 9. To regulate the model's memory consumption and to prevent it from growing too wide, 1×1 kernels were employed in the hierarchical cascade structure to keep the number of channels of the concatenated features fixed at 128, the same as the number of output feature maps of the other atrous convolution layers.
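A rough feel for how the atrous rates of 3, 6, and 9 enlarge the receptive field can be obtained with the standard receptive-field arithmetic for stride-1 convolutions. The sketch below assumes 3×3 kernels in both the encoder and the cascade (the kernel size K is not restated in this section) and treats the three atrous layers as applied in sequence, which gives the largest receptive field along the cascade; both are assumptions made for illustration.

```python
def effective_kernel(k, d):
    """Extent covered by a k x k kernel with dilation (atrous) rate d."""
    return k + (k - 1) * (d - 1)


def stacked_receptive_field(layers):
    """Receptive field of stride-1 convolutions applied one after another.

    layers: list of (kernel_size, dilation_rate) tuples.
    """
    rf = 1
    for k, d in layers:
        rf += (k - 1) * d
    return rf


K = 3               # assumed kernel size
rates = [3, 6, 9]   # atrous rates reported in the text

kernels = [effective_kernel(K, d) for d in rates]
encoder = [(3, 1)] * 13                  # 13 plain 3x3 layers, no pooling
cascade = [(K, d) for d in rates]

encoder_rf = stacked_receptive_field(encoder)
cascade_rf = stacked_receptive_field(cascade)
total_rf = stacked_receptive_field(encoder + cascade)

print(kernels, encoder_rf, cascade_rf, total_rf)
```

Under these assumptions the three atrous layers alone span 37 pixels, more than the 27 pixels reached by the 13 undilated encoder layers. The 1×1 convolutions used to fix the channel count do not change the receptive field.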

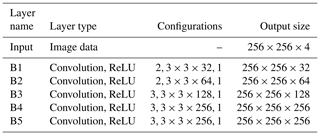

Table 1. Detailed configuration of the encoder in the proposed HCHNet method. Each configuration entry gives the number of convolution layers l, the number of convolution kernels n, the kernel size k×k, and the stride s. Each output size gives the height h, width w, and number of channels c of the output image or feature maps.

For the training and testing of HCHNet, we selected a total of 705 non-overlapping patches of 256×256 pixels from the raw images (Fig. 1). The ground truth map for each patch was obtained by visual interpretation. We randomly selected 80 % of the patches to construct the training dataset. Considering the relatively small training dataset, data augmentation was applied to make the training process more effective and reduce overfitting: each patch was flipped in the horizontal and vertical directions and was rotated counterclockwise by 90°. As a result, 4656 patches were available for the training of HCHNet.
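The three augmentation operations can be sketched in plain Python on patches stored as nested lists. The helper names are hypothetical, and the sketch only shows the geometric transforms and the fact that the image and its label map must be transformed together; how the augmented patches were combined to reach the reported total is not specified here.

```python
def flip_horizontal(patch):
    """Mirror each row (left-right flip)."""
    return [row[::-1] for row in patch]


def flip_vertical(patch):
    """Reverse the row order (up-down flip)."""
    return [list(row) for row in patch[::-1]]


def rotate_ccw_90(patch):
    """Rotate counterclockwise by 90 degrees."""
    return [list(col) for col in zip(*patch)][::-1]


def augment(image, label):
    """Apply the same transform to the image and its label map."""
    transforms = [lambda p: [list(r) for r in p],
                  flip_horizontal, flip_vertical, rotate_ccw_90]
    return [(t(image), t(label)) for t in transforms]


# Tiny 2x2 stand-in for a 256x256 patch and its label map.
image = [[1, 2],
         [3, 4]]
label = [["sea", "MPC"],
         ["sea", "sea"]]

augmented = augment(image, label)
for img, lab in augmented:
    print(img, lab)
```

Each returned pair keeps the label aligned with the pixel values, so the MPC label always follows the pixel with value 2 in this toy example.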

In the experiment, we trained the HCHNet for 30 epochs using a batch size of 4 and the Adam optimizer, with β1=0.9, β2=0.999, and a learning rate of 0.0001. The HCHNet was built and implemented using Keras (v2.2.4) on top of TensorFlow (v1.8.0). All of the experiments were undertaken on a computer with an NVIDIA GeForce GTX 1070 graphics processing unit (GPU).

3.4 Comparing methods and accuracy assessment

3.4.1 Comparing methods

To assess the effectiveness and advantage of our proposed method, we provided a comparison with four state-of-the-art FCN-based methods. We summarized the main information as follows.

FCN-32s is the first FCN-based method proposed by Long et al. (2015) for semantic segmentation. It was constructed based on the VGG16, in which the original fully connected layers are convolutionized. The model predicts classification results by directly upsampling the final feature maps by a factor of 32. It does not use any structure to extract multi-scale feature maps or get more detailed information from the shallow layers. Thus, it can be used as a baseline model for our proposed HCHNet method.

U-Net is a typical FCN-based model with an encoder–decoder structure, which was proposed by Ronneberger et al. (2015) for semantic segmentation of medical images. The encoder has a similar structure to VGG16. Unlike FCN-32s, U-Net combines the feature maps in the decoder with the mirrored feature maps in the encoder by using long-span connections to provide precise localization and high classification accuracy.

Deeplab V2 (with VGG16 as the backbone) was proposed by Chen et al. (2018) for semantic segmentation. It uses the atrous spatial pyramid pooling (ASPP) structure to capture multi-scale contextual information and then applies fully connected conditional random fields (CRFs) as a post-processing tool to refine the prediction results.

HCNet was proposed by Fu et al. (2019b) to map the detailed spatial distribution of marine aquaculture from HSR images. This model has a variant of VGG16 as an encoder, in which the stride and padding of the last two pooling layers are set as one for high-resolution feature maps. It combines long-span connections with a hierarchical cascade structure.

The above models are suitable for comparison because nearly all of them are VGG16-based neural networks and employ typical structures for multi-scale information extraction. In the training phase, all of the above models were trained from scratch using the same patches and experimental settings as the proposed HCHNet.

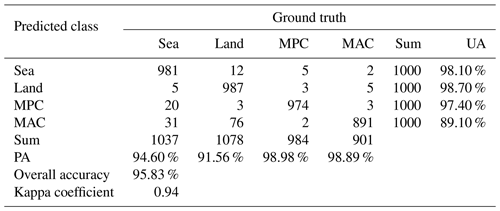

3.4.2 Accuracy assessment and comparison

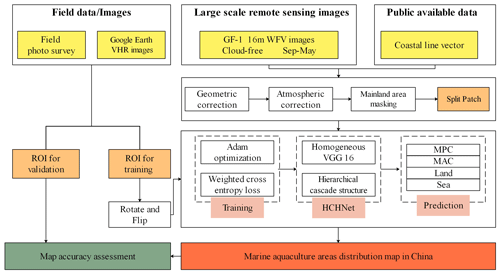

To ensure representativeness of each class in the whole sample population for accuracy assessment, we followed the widely used random stratified sampling method (Padilla et al., 2014; Ramezan et al., 2019) to generate 4000 randomly selected points in the coastal zone. Based on the visual interpretation results from HSR images, several commonly used accuracy statistics were calculated from the error matrix, such as producer accuracy (PA), user accuracy (UA), overall accuracy (OA), and the kappa coefficient. Meanwhile, we also conducted the area accuracy assessment (the percentage of overlapping areas in the ground truth) based on more than 120 randomly selected 256×256 patches, which accounts for nearly 20 % of the total samples.

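After that, we compared the performance of our proposed method with four state-of-the-art FCN-based models. The accuracy comparison was undertaken using the test dataset. To provide a quantitative assessment between our proposed method and other methods, we calculated the widely used F1 score (F1), precision, and recall (Powers, 2011) as follows:

$$\mathrm{Precision} = \frac{\mathrm{TP}}{\mathrm{TP}+\mathrm{FP}}, \qquad \mathrm{Recall} = \frac{\mathrm{TP}}{\mathrm{TP}+\mathrm{FN}}, \qquad F_1 = \frac{2 \times \mathrm{Precision} \times \mathrm{Recall}}{\mathrm{Precision}+\mathrm{Recall}},$$

where TP is the number of true positives, FP is the number of false positives, and FN is the number of false negatives.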

To compare the ability of the different models to discriminate marine aquaculture areas, these accuracy values were calculated for each class, and the mean F1 values of the MAC and MPC areas were used to assess the performance of the different methods.
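As a small worked example of these metrics, the Python sketch below computes precision, recall, and F1 per class from TP, FP, and FN counts and then averages the F1 values of the two aquaculture classes. The counts are invented purely for illustration and do not come from the test dataset.

```python
def precision_recall_f1(tp, fp, fn):
    """Precision, recall, and F1 from true/false positive and false negative counts."""
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    if precision + recall == 0:
        return precision, recall, 0.0
    f1 = 2 * precision * recall / (precision + recall)
    return precision, recall, f1


# Hypothetical (TP, FP, FN) pixel counts per class.
counts = {"MPC": (80, 20, 20), "MAC": (60, 20, 40)}

scores = {name: precision_recall_f1(*c) for name, c in counts.items()}
mean_f1 = sum(f1 for _, _, f1 in scores.values()) / len(scores)

for name, (p, r, f1) in scores.items():
    print(name, round(p, 3), round(r, 3), round(f1, 3))
print("mean F1", round(mean_f1, 3))
```

In this toy case the MAC class has a lower recall than precision, so its F1 falls between the two, and the mean F1 summarizes both classes in a single number.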

4.1 Spatial distribution of marine aquaculture areas in China

The final classification results are shown in Fig. 5, with the corresponding Google Maps kmz file published at https://doi.org/10.5281/zenodo.3881612 (Fu et al., 2020). The prediction results of typical areas (Fig. 5a–d) demonstrate the applicability and robustness of the HCHNet method to different marine aquaculture areas (i.e., MPC and MAC) over different study sites (i.e., from Liaoning to Guangxi provinces).

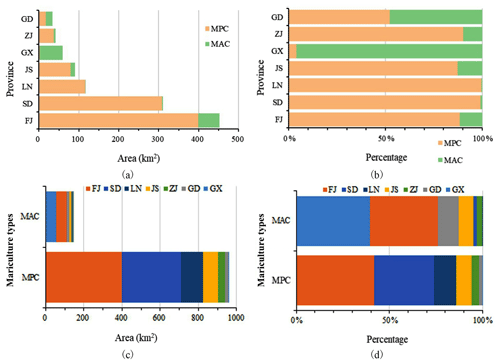

According to the classification results, the total area of marine aquaculture in China is approximately 1103.67 km². As can be seen from Fig. 6a, marine aquaculture is mainly distributed in the coastal areas of Fujian, Shandong, Liaoning, and Jiangsu provinces. Fujian and Shandong provinces have the largest areas of over 300 and 450 km², respectively. Furthermore, nearly 100 km² of marine aquaculture areas is found in Liaoning and Jiangsu provinces. Figure 6b shows that over 85 % of the marine aquaculture areas in these four coastal areas are MPC. Figure 6b also shows that the provinces in north China, such as Liaoning and Shandong, tend to have more MPC areas, with the provinces in south China having more MAC areas.

Figure 6c shows that most of the marine aquaculture areas in China are MPC areas, with an area of over 950 km², 6 times larger than the MAC area. Guangxi and Fujian provinces have the largest areas of MAC, which account for more than 70 % of the total MAC areas in China (Fig. 6d). The largest areas of MPC are found in Fujian and Shandong provinces, accounting for more than 70 % of the total MPC areas in China.

4.2 Accuracy assessment of the marine aquaculture area map in China

To quantitatively evaluate the classification performance of our proposed HCHNet method, we used the random stratified sampling method to perform the evaluation. A total of 4000 reference pixels were randomly selected, with 1000 pixels from the classification results for each class. We then obtained the ground truth of each point by visual interpretation based on HSR images from Google Earth point by point.
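The sampling step can be sketched as follows: pixels are grouped by their mapped class and the same number of points is drawn at random from each group. The function name, the toy 20×20 map, and the sample size of 10 per class are assumptions made to keep the example small; the study drew 1000 points per class from the national map.

```python
import random


def stratified_sample(class_map, per_class, seed=0):
    """Draw `per_class` random pixel positions from each mapped class.

    class_map: 2D list of class labels. Returns {label: [(row, col), ...]}.
    """
    rng = random.Random(seed)
    by_class = {}
    for r, row in enumerate(class_map):
        for c, label in enumerate(row):
            by_class.setdefault(label, []).append((r, c))
    return {label: rng.sample(pixels, min(per_class, len(pixels)))
            for label, pixels in by_class.items()}


# Toy classified map: four horizontal bands, one per class.
labels = ["land", "sea", "MPC", "MAC"]
class_map = [[labels[r // 5]] * 20 for r in range(20)]

samples = stratified_sample(class_map, per_class=10)
for label, points in samples.items():
    print(label, points[:3])
```

Sampling by mapped class guarantees that rare classes such as MAC receive as many reference points as the sea and land classes, which a simple random sample over the whole coastal zone would not.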

The error matrix in Table 2 shows that the overall accuracy was 95.83 % and the kappa coefficient was 0.94. The land and sea areas have the highest classification accuracy, with both PA and UA values greater than 91 %. The MPC areas have relatively high PA and UA values of approximately 95 %. Most of the misclassifications of MPC areas are related to the sea area. This is because the MPC areas are submerged in a complex sea environment and their appearance can easily be affected by waves, seafloor topography, shadows of clouds, etc. The MAC areas have a relatively lower UA value of 89.1 %, which may be caused by the relatively high complexity and the small number of training patches of MAC areas.
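The statistics reported from the error matrix can be reproduced with a short Python function. The matrix below is a made-up example with 1000 points per mapped class, not the matrix of Table 2; rows are assumed to hold the mapped class and columns the reference class.

```python
def accuracy_metrics(matrix):
    """Overall accuracy, user accuracy, producer accuracy, and kappa.

    matrix[i][j]: number of points mapped as class i with reference class j.
    """
    k = len(matrix)
    n = sum(sum(row) for row in matrix)
    row_tot = [sum(row) for row in matrix]
    col_tot = [sum(matrix[i][j] for i in range(k)) for j in range(k)]
    diag = [matrix[i][i] for i in range(k)]
    oa = sum(diag) / n
    ua = [diag[i] / row_tot[i] for i in range(k)]   # per mapped class
    pa = [diag[j] / col_tot[j] for j in range(k)]   # per reference class
    pe = sum(row_tot[i] * col_tot[i] for i in range(k)) / n ** 2
    kappa = (oa - pe) / (1 - pe)
    return oa, ua, pa, kappa


# Hypothetical error matrix; class order: land, sea, MPC, MAC.
matrix = [
    [980, 10, 5, 5],
    [10, 960, 25, 5],
    [5, 40, 950, 5],
    [20, 60, 20, 900],
]

oa, ua, pa, kappa = accuracy_metrics(matrix)
print(round(oa, 4), [round(u, 3) for u in ua],
      [round(p, 3) for p in pa], round(kappa, 3))
```

When every mapped class contributes the same number of points, as in the sampling design described above, the chance agreement is 0.25 whatever the column totals are. An overall accuracy of 95.83 % then corresponds to a kappa of (0.9583 − 0.25)/0.75 ≈ 0.94, in line with the reported coefficient.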

We then employed bootstrapping (Efron and Tibshirani, 1997), which is suitable for estimating classification accuracy (Duan et al., 2020; Lyons et al., 2018), to estimate the uncertainty level. We bootstrapped the overall accuracy from the 4000 independent reference points. The bootstrapping was performed for 1000 iterations; the mean of the distribution was used for the evaluation, and the confidence interval was set at the 95 % level. We obtained an overall accuracy of 95.8 % (95.2 %–96.4 %, 95 % confidence interval) and an estimated marine aquaculture area in China of 1103.67 km² (1096.8–1110.6 km², 95 % confidence interval).
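A percentile bootstrap of the overall accuracy can be sketched as below. The sketch assumes that 3833 of the 4000 reference points were classified correctly (which rounds to the reported overall accuracy) and uses the percentile method for the interval; the exact resampling procedure used in the study may differ.

```python
import random


def bootstrap_accuracy(outcomes, iterations=1000, seed=0):
    """Percentile bootstrap of the mean of 0/1 outcomes.

    Returns (mean of bootstrap estimates, 2.5th percentile, 97.5th percentile).
    """
    rng = random.Random(seed)
    n = len(outcomes)
    estimates = sorted(sum(rng.choices(outcomes, k=n)) / n
                       for _ in range(iterations))
    mean = sum(estimates) / iterations
    lower = estimates[(25 * iterations) // 1000]
    upper = estimates[(975 * iterations) // 1000 - 1]
    return mean, lower, upper


# Assumed outcomes: 1 = reference point classified correctly, 0 = not.
outcomes = [1] * 3833 + [0] * 167

mean, lower, upper = bootstrap_accuracy(outcomes)
print(round(100 * mean, 1), round(100 * lower, 1), round(100 * upper, 1))
```

For a sample of this size, the normal approximation gives a 95 % half-width of about 0.6 percentage points, which is the same order as the interval reported above.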

To further assess the validity of our proposed HCHNet method, we also evaluated the area accuracy (percentage of the overlapping area) based on the test dataset, including 120 randomly selected patches with a size of 256×256, which accounts for 20 % of the labeled samples. As shown in Table 3, the land and sea areas have the best classification accuracy with area accuracy values greater than 93 %. Meanwhile, the MPC and MAC also have relatively high area accuracy values of 81.8 % and 72.5 %, respectively.
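The area accuracy can be read as the share of ground truth pixels of a class that the prediction also assigns to that class. The sketch below implements this reading on a toy 3×3 patch; the interpretation of the overlap, the function name, and the example labels are assumptions.

```python
def area_accuracy(pred, truth, label):
    """Percentage of ground truth pixels of `label` that are also predicted as `label`."""
    truth_pixels = 0
    overlap = 0
    for pred_row, truth_row in zip(pred, truth):
        for p, t in zip(pred_row, truth_row):
            if t == label:
                truth_pixels += 1
                if p == label:
                    overlap += 1
    if truth_pixels == 0:
        return float("nan")
    return 100.0 * overlap / truth_pixels


# Toy prediction and ground truth for one patch.
pred = [["sea", "MPC", "MPC"],
        ["sea", "MPC", "sea"],
        ["MAC", "MAC", "sea"]]
truth = [["sea", "MPC", "MPC"],
         ["MPC", "MPC", "sea"],
         ["MAC", "sea", "sea"]]

for name in ["sea", "MPC", "MAC"]:
    print(name, area_accuracy(pred, truth, name))
```

Because the measure is normalized by the ground truth area, it penalizes missed aquaculture pixels but not pixels that are wrongly added, so it complements the point-based error matrix instead of replacing it.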

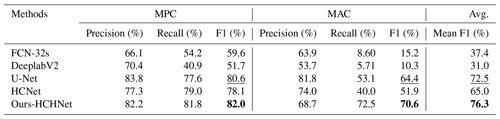

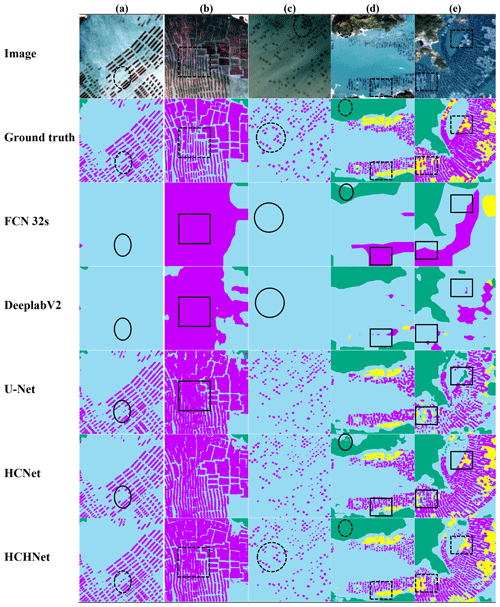

4.3 Comparison with the state-of-the-art methods

To assess the quality of the proposed HCHNet method, the performance was compared with the results from other state-of-the-art FCN-based methods, using the same test dataset. As can be seen from the prediction results in Fig. 7, FCN-32s and Deeplab V2 are unable to reliably identify marine aquaculture, especially the small and isolated marine aquaculture areas (Fig. 7b, d, e), with much coarser predicted results than other approaches. HCHNet identified more MPC and MAC areas than U-Net and HCNet (Fig. 7a, d, e) and also identified detailed information, even with the narrow channels among neighboring MPC areas (Fig. 7b, e).

Table 4. Quantitative comparison of MPC and MAC areas between our method and other methods, where the best accuracy values (%) are in bold and the second best are underlined.

Figure 7. The classification results of MPC and MAC areas comparing the proposed HCHNet method with other approaches. The black solid outlined areas indicate where HCHNet obtains better results. The dotted line shows the same locations in other images. The purple, yellow, blue, and green areas in the classification maps represent the MPC, MAC, sea, and land areas, respectively.

To provide a quantitative comparison, several commonly used accuracy metrics were calculated from the test dataset for MAC and MPC areas. Table 4 shows that the FCN-32s and Deeplab V2 achieved similar accuracy values, with mean F1 values less than 40 %. The U-Net and HCNet achieved a similar classification performance, with mean F1 values of approximately 70 %. Compared with these state-of-the-art methods, our proposed HCHNet approach obtained the best classification performance, with a mean F1 value of 76.3 %. In addition, the HCHNet also achieved a good balance between precision and recall values of MAC, identifying more accurate and existing MAC areas. The difference between them is less than 4 % for the HCHNet, while the difference values of other methods are more than 28 %.

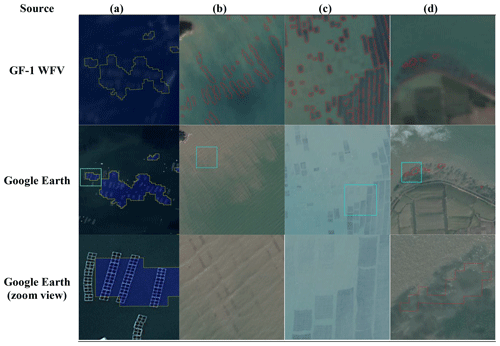

Figure 8. Illustration of potential sources of error in the HCHNet algorithm: the boundaries of relatively small and separate MAC (a) or MPC (b) areas are difficult to identify accurately. (c) Harvested MPC areas are also difficult to detect due to shallow waters and the disappearing dark tone. (d) Vegetation located close to the water bodies may be misclassified as MPC areas. The first row highlights typical misclassified areas from GF-1 WFV data. The second row shows high-spatial-resolution images from Google Earth (© Google Maps). The third row is a zoom view of the Google Earth images in the second row (© Google Maps). The red and yellow areas indicate the classification results of MPC and MAC areas, respectively.

5.1 Data and algorithms for the mapping of marine aquaculture areas in China

This study developed a new algorithm to separate two typical marine aquaculture types based on the most advanced FCN-based models. The HCHNet was applied to medium-spatial-resolution images from China's GF-1 WFV sensors to map the marine aquaculture areas in China. The input data and the algorithm used in our study were different from current state-of-the-art methods in many ways.

First, China's GF-1 WFV sensors provide a larger number of valid image scenes that are suitable for a wide range of analyses of marine aquaculture areas with high temporal resolution. MPC areas are only visible in several specific months due to phenological development stages. However, it is difficult to capture appropriate images that clearly represent the marine culture areas in these months from other similar satellites, such as Landsat, because their images are frequently affected by clouds or waves. The high temporal resolution of the GF-1 WFV data (a 2 d revisit cycle) means that it is possible to observe marine aquaculture areas at a much greater frequency than with data from other sources. Additionally, the relatively wide swath of the data makes them highly suitable for such large-scale mapping in China. Finally, it is possible to directly obtain images with 16 m spatial resolution without any additional computations, such as pan-sharpening operations, making the GF-1 WFV data a reliable data source for large-scale marine aquaculture area observation and monitoring.

Second, the proposed FCN-based HCHNet method improves the classification accuracy and efficiency. Much previous research has used OBIA approaches (Fu et al., 2019a; Wang et al., 2017) and other FCN-based methods (Fu et al., 2019b; Shi et al., 2018). The accuracy of the OBIA method depends on segmentation, for which there is no universal method of evaluating the selection of appropriate segmentation parameters (Blaschke, 2010). It also takes a large amount of time to undertake the segmentation process and to design effective features or rules for hard classifications (Zheng et al., 2017), making such approaches more difficult to implement operationally for national-scale studies. The proposed HCHNet achieved the best classification performance for three reasons: (1) all of the pooling operations were removed to avoid the shrinking of features, which helps improve the identification of smaller foreground objects; (2) the hierarchical structure was used to enlarge the receptive field to capture more contextual information, which is helpful for reducing the influence of local variance; and (3) the weighted loss function was employed to address the class imbalance problem.

Third, coastal land areas that do not intersect with marine aquaculture areas were masked out using publicly available data, a simple and straightforward methodological refinement that constrains the marine aquaculture mapping. This was important because the classification covers large coastal areas in China, which contain various land covers outside the aims of this study. Previous studies have used a threshold value (Zheng et al., 2017) to mask out these land areas, whereas in this study the land areas were masked directly.
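The masking step can be sketched as follows, assuming the publicly available land data have already been rasterized to a boolean array on the same grid as the classification map. The function name `apply_land_mask` and the label value `BACKGROUND = 0` are hypothetical and are not taken from the original processing chain.

```python
import numpy as np

BACKGROUND = 0  # hypothetical label for "not marine aquaculture"

def apply_land_mask(class_map, land_mask, fill=BACKGROUND):
    """Set every pixel flagged as land to the background label.

    class_map: integer array (H, W) of predicted class labels
    land_mask: boolean array (H, W), True where the pixel is land
    """
    class_map = np.asarray(class_map)
    land_mask = np.asarray(land_mask, dtype=bool)
    if class_map.shape != land_mask.shape:
        raise ValueError("class_map and land_mask must share the same grid")
    # np.where keeps the prediction over sea and overwrites it over land.
    return np.where(land_mask, fill, class_map)

if __name__ == "__main__":
    predicted = np.array([[1, 2],
                          [0, 1]])
    land = np.array([[True, False],
                     [False, True]])
    print(apply_land_mask(predicted, land))
```

Unlike a spectral threshold, a mask of this kind does not depend on image radiometry, so it behaves identically for every scene.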

5.2 Uncertainty and limitations of the marine aquaculture map in China

Accurate mapping of marine aquaculture areas at a regional scale is challenging, and there are several potential sources of uncertainty in our methods. First, because of the medium spatial resolution of the imagery and the relatively small size of MAC areas (Fig. 8a), it is difficult to accurately identify the boundaries of small and isolated MAC areas (generally less than 10 pixels). MAC may be overestimated where the sea water between several MAC areas is misclassified as MAC. Second, the HCHNet failed to detect small MPC areas (Fig. 8b) and harvested MPC areas (Fig. 8c), which leads to underestimation. Third, as shown in Fig. 8d, some vegetation that is submerged or close to the sea water may be misclassified as MPC areas, since these pixels have similar spectral and shape features.
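To give a sense of scale for the 10-pixel limit mentioned above, the following sketch converts pixel counts to ground area. It assumes each pixel covers exactly 16 m × 16 m, which ignores projection and resampling effects; the helper names are hypothetical.

```python
PIXEL_SIZE_M = 16.0  # nominal GF-1 WFV pixel size stated in the text

def pixels_to_km2(n_pixels, pixel_size_m=PIXEL_SIZE_M):
    """Ground area in km2 covered by n_pixels square pixels."""
    return n_pixels * pixel_size_m ** 2 / 1e6

def km2_to_pixels(area_km2, pixel_size_m=PIXEL_SIZE_M):
    """Number of square pixels needed to cover area_km2."""
    return area_km2 * 1e6 / pixel_size_m ** 2

if __name__ == "__main__":
    print("10 pixels in km2:", pixels_to_km2(10))
    print("pixels in 1100 km2:", km2_to_pixels(1100))
```

Under this assumption, a 10-pixel MAC patch covers 2560 m2 (0.00256 km2), so a single misclassified boundary pixel already changes its mapped area by 10 %.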

There are also some limitations of the proposed HCHNet approach. First, the training process requires a large number of high-quality ground truth labels, which demands considerable manual work and professional interpretation experience. Therefore, further research on weak supervision (Lin et al., 2016; Pathak et al., 2015) and on accelerating the training or inference process through model compression methods (Li et al., 2017; Yim et al., 2017; X. Zhang et al., 2016) will be undertaken to enhance the applicability of the approach. Second, the proposed method can only be used to monitor marine aquaculture areas at the water surface; it is unable to detect the submerged cages used in some places (such as the coastal area of Shandong province in northeastern China).

The map of marine aquaculture in China's coastal zone at 16 m spatial resolution has been published in the Google Maps kmz file format with georeferencing information at https://doi.org/10.5281/zenodo.3881612 (Fu et al., 2020).

Marine aquaculture areas and the coastal environment they rely on are of significant ecological and socioeconomic value. Accurate and effective mapping approaches are imperative for the monitoring, planning, and sustainable development of marine and coastal resources across local, regional, and global scales. The increasing public availability of remote sensing data, ancillary data, and advanced computer vision algorithms together provides an effective route for identifying marine aquaculture areas at a national scale. Drawing on the inherent self-learning mechanism of deep learning, a new algorithm was designed based on the FCN structure and applied to the GF-1 WFV data. The application of this algorithm produced a marine aquaculture area map of China with an overall classification accuracy > 95 % (95.2 %–96.4 %, 95 % confidence interval). The total area of China's marine aquaculture areas was estimated to be approximately 1100 km2 (1096.8–1110.6 km2, 95 % confidence interval), of which more than 85 % is MPC areas. Most of the marine aquaculture areas are distributed along the coasts of Fujian, Shandong, Liaoning, and Jiangsu provinces. Guangxi and Fujian provinces have the largest areas of MAC, and Fujian and Shandong have the largest areas of MPC. The algorithm could be implemented at other regional and global scales, provided that sufficient samples are collected and the marine aquaculture phenology in these areas is carefully investigated.

The supplement related to this article is available online at: https://doi.org/10.5194/essd-13-1829-2021-supplement.

YF conceived and drafted the manuscript and performed all data analysis. JD and WY funded this research. WW, SY, and YL collected remote sensing data and ground photos. AC, HW, and KW provided edits and suggestions. All authors revised the manuscript before submission and during the review process.

The authors declare that they have no conflict of interest.

This research has been supported by the National Natural Science Foundation of China (grant no. 71974171), the Ministry of Science and Technology of China (grant no. 2016YFC0503404), the Natural Science Foundation of Zhejiang Province (grant no. LY18G030006), the Science and Technology Department of Zhejiang Province (grant no. 2018F10016), and the Natural Environment Research Council (grant no. NE/E523213/1).

This paper was edited by David Carlson and reviewed by Bo Yang and two anonymous referees.

Badrinarayanan, V., Kendall, A., and Cipolla, R.: SegNet: A Deep Convolutional Encoder-Decoder Architecture for Image Segmentation, IEEE Trans. Pattern Anal. Mach. Intell., 39, 2481–2495, https://doi.org/10.1109/TPAMI.2016.2644615, 2017.

Bertasius, G., Shi, J., and Torresani, L.: Semantic Segmentation with Boundary Neural Fields, in: 2016 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), IEEE, Las Vegas, NV, USA, 27–30 June 2016, 3602–3610, 2016.

Blaschke, T.: Object based image analysis for remote sensing, ISPRS J. Photogramm. Remote Sens., 65, 2–16, https://doi.org/10.1016/j.isprsjprs.2009.06.004, 2010.

Blaschke, T., Hay, G. J., Kelly, M., Lang, S., Hofmann, P., Addink, E., Queiroz Feitosa, R., van der Meer, F., van der Werff, H., van Coillie, F., and Tiede, D.: Geographic Object-Based Image Analysis - Towards a new paradigm, ISPRS J. Photogramm. Remote Sens., 87, 180–191, https://doi.org/10.1016/j.isprsjprs.2013.09.014, 2014.

Burbridge, P., Hendrick, V., Roth, E., and Rosenthal, H.: Social and economic policy issues relevant to marine aquaculture, J. Appl. Ichthyol., 17, 194–206, https://doi.org/10.1046/j.1439-0426.2001.00316.x, 2001.

Bureau of Fisheries of the Ministry of Agriculture: China Fishery Statistical Yearbook 2001, China Agriculture Press, Beijing, China, 2001.

Bureau of Fisheries of the Ministry of Agriculture: China Fishery Statistical Yearbook 2020, China Agriculture Press, Beijing, China, 2020.

Campbell, B. and Pauly, D.: Mariculture: A global analysis of production trends since 1950, Mar. Policy, 39, 94–100, https://doi.org/10.1016/j.marpol.2012.10.009, 2013.

Chen, L.-C., Papandreou, G., Kokkinos, I., Murphy, K., and Yuille, A. L.: DeepLab: Semantic Image Segmentation with Deep Convolutional Nets, Atrous Convolution, and Fully Connected CRFs, IEEE Trans. Pattern Anal. Mach. Intell., 40, 834–848, https://doi.org/10.1109/TPAMI.2017.2699184, 2018.

Chuang, L., Ruixiang, S., Yinghua, Z., Yan, S., Junhua, M., Lizong, W., Wenbo, C., Doko, T., Lijun, C., Tingting, L., Zui, T., and Yunqiang, Z.: Global Multiple Scale Shorelines Dataset Based on Google Earth Images (2015), Digital Journal of Global Change Data Repository, https://doi.org/10.3974/geodb.2019.04.13.V1, 2019.

Cui, B., Fei, D., Shao, G., Lu, Y., and Chu, J.: Extracting Raft Aquaculture Areas from Remote Sensing Images via an Improved U-Net with a PSE Structure, Remote Sens., 11, 2053, https://doi.org/10.3390/rs11172053, 2019.

Duan, Y., Li, X., Zhang, L., Chen, D., Liu, S., and Ji, H.: Mapping national-scale aquaculture ponds based on the Google Earth Engine in the Chinese coastal zone, Aquaculture, 520, 734666, https://doi.org/10.1016/j.aquaculture.2019.734666, 2020.

Efron, B. and Tibshirani, R.: Improvements on cross-validation: The .632+ bootstrap method, J. Am. Stat. Assoc., 92, 548–560, https://doi.org/10.1080/01621459.1997.10474007, 1997.

Eigen, D. and Fergus, R.: Predicting depth, surface normals and semantic labels with a common multi-scale convolutional architecture, in: 2015 IEEE International Conference on Computer Vision (ICCV), IEEE, Santiago, Chile, 7–13 December 2015, 2650–2658, 2015.

Fan, J., Chu, J., Geng, J., and Zhang, F.: Floating raft aquaculture information automatic extraction based on high resolution SAR images, in: 2015 IEEE International Geoscience and Remote Sensing Symposium (IGARSS), IEEE, Milan, Italy, 26–31 July 2015, 3898–3901, 2015.

Fu, Y., Deng, J., Ye, Z., Gan, M., Wang, K., Wu, J., Yang, W., and Xiao, G.: Coastal aquaculture mapping from very high spatial resolution imagery by combining object-based neighbor features, Sustainability, 11, 637, https://doi.org/10.3390/su11030637, 2019a.

Fu, Y., Ye, Z., Deng, J., Zheng, X., Huang, Y., Yang, W., Wang, Y., and Wang, K.: Finer Resolution Mapping of Marine Aquaculture Areas Using WorldView-2 Imagery and a Hierarchical Cascade Convolutional Neural Network, Remote Sens., 11, 1678, https://doi.org/10.3390/rs11141678, 2019b.

Fu, Y., Liu, K., Shen, Z., Deng, J., Gan, M., Liu, X., Lu, D., and Wang, K.: Mapping Impervious Surfaces in Town–Rural Transition Belts Using China's GF-2 Imagery and Object-Based Deep CNNs, Remote Sens., 11, 280, https://doi.org/10.3390/rs11030280, 2019c.

Fu, Y., Deng, J., Wang, H., Comber, A., Yang, W., Wu, W., You, X., Lin, Y., and Wang, K.: A new satellite-derived dataset for marine aquaculture in the China's coastal region, Data set, Zenodo, https://doi.org/10.5281/zenodo.3833225, 2020.

Galil, B. S.: Taking stock: Inventory of alien species in the Mediterranean sea, Biol. Invasions, 11, 359–372, https://doi.org/10.1007/s10530-008-9253-y, 2009.

Gentry, R. R., Froehlich, H. E., Grimm, D., Kareiva, P., Parke, M., Rust, M., Gaines, S. D., and Halpern, B. S.: Mapping the global potential for marine aquaculture, Nat. Ecol. Evol., 1, 1317–1324, https://doi.org/10.1038/s41559-017-0257-9, 2017.

He, K., Zhang, X., Ren, S., and Sun, J.: Spatial Pyramid Pooling in Deep Convolutional Networks for Visual Recognition, IEEE Trans. Pattern Anal. Mach. Intell., 37, 1904–1916, https://doi.org/10.1109/TPAMI.2015.2389824, 2015.

Ioffe, S. and Szegedy, C.: Batch normalization: Accelerating deep network training by reducing internal covariate shift, in: Proceedings of the International Conference on Machine Learning, Lille, France, 6–11 July 2015, 448–456, 2015.

Li, H., Kadav, A., Durdanovic, I., Samet, H., and Graf, H. P.: Pruning Filters for Efficient ConvNets, in: International Conference on Learning Representations, Toulon, France, 24–26 April 2017, 1–13, 2017.

Liang, Y., Cheng, X., Zhu, H., Shutes, B., Yan, B., Zhou, Q., and Yu, X.: Historical Evolution of Mariculture in China During Past 40 Years and Its Impacts on Eco-environment, Chinese Geogr. Sci., 28, 363–373, https://doi.org/10.1007/s11769-018-0940-z, 2018.

Lin, D., Dai, J., Jia, J., He, K., and Sun, J.: ScribbleSup: Scribble-Supervised Convolutional Networks for Semantic Segmentation, in: 2016 IEEE Conference on Computer Vision and Pattern Recognition, IEEE, Las Vegas, NV, USA, 27–30 June 2016, 3159–3167, 2016.

Liu, Y., Zhong, Y., Fei, F., and Zhang, L.: Scene semantic classification based on random-scale stretched convolutional neural network for high-spatial resolution remote sensing imagery, in: 2016 IEEE International Geoscience and Remote Sensing Symposium (IGARSS), IEEE, Beijing, China, 763–766, 2016.

Liu, Y., Yu, J., and Han, Y.: Understanding the effective receptive field in semantic image segmentation, Multimed. Tools Appl., 77, 22159–22171, https://doi.org/10.1007/s11042-018-5704-3, 2018.

Long, J., Shelhamer, E., and Darrell, T.: Fully convolutional networks for semantic segmentation, in: Proceedings of the IEEE Computer Society Conference on Computer Vision and Pattern Recognition, Boston, MA, USA, 7–12 June 2015, 3431–3440, 2015.

Lu, Y., Li, Q., Du, X., Wang, H., and Liu, J.: A Method of Coastal Aquaculture Area Automatic Extraction with High Spatial Resolution Images, Remote Sens. Technol. Appl., 30, 486–494, https://doi.org/10.11873/j.issn.1004-0323.2015.3.0486, 2015.

Lyons, M. B., Keith, D. A., Phinn, S. R., Mason, T. J., and Elith, J.: A comparison of resampling methods for remote sensing classification and accuracy assessment, Remote Sens. Environ., 208, 145–153, https://doi.org/10.1016/j.rse.2018.02.026, 2018.

Marmanis, D., Schindler, K., Wegner, J. D., Galliani, S., Datcu, M., and Stilla, U.: Classification with an edge: Improving semantic image segmentation with boundary detection, ISPRS J. Photogramm. Remote Sens., 135, 158–172, https://doi.org/10.1016/j.isprsjprs.2017.11.009, 2018.

Noh, H., Hong, S., and Han, B.: Learning deconvolution network for semantic segmentation, in: 2015 IEEE International Conference on Computer Vision (ICCV), IEEE, Santiago, Chile, 7–13 December 2015, 1520–1528, 2015.

Padilla, M., Stehman, S. V., and Chuvieco, E.: Validation of the 2008 MODIS-MCD45 global burned area product using stratified random sampling, Remote Sens. Environ., 144, 187–196, https://doi.org/10.1016/j.rse.2014.01.008, 2014.

Pathak, D., Krahenbuhl, P., and Darrell, T.: Constrained convolutional neural networks for weakly supervised segmentation, in: 2015 IEEE International Conference on Computer Vision, IEEE, Santiago, Chile, 7–13 December 2015, 1796–1804, 2015.

Pinheiro, P. O., Lin, T. Y., Collobert, R., and Dollár, P.: Learning to refine object segments, in: European conference on computer vision, Springer Nature, Amsterdam, The Netherlands, 8–16 October 2016, 75–91, 2016.

Porrello, S., Tomassetti, P., Manzueto, L., Finoia, M. G., Persia, E., Mercatali, I., and Stipa, P.: The influence of marine cages on the sediment chemistry in the Western Mediterranean Sea, Aquaculture, 249, 145–158, https://doi.org/10.1016/j.aquaculture.2005.02.042, 2005.

Powers, D. M. W.: Evaluation: From precision, recall and f-measure to roc, informedness, markedness and correlation, J. Mach. Learn. Technol., 2, 37–63, 2011.

Ramezan, C. A., Warner, T. A., and Maxwell, A. E.: Evaluation of sampling and cross-validation tuning strategies for regional-scale machine learning classification, Remote Sens., 11, 185, https://doi.org/10.3390/rs11020185, 2019.

Rigos, G. and Katharios, P.: Pathological obstacles of newly-introduced fish species in Mediterranean mariculture: A review, Rev. Fish Biol. Fish., 20, 47–70, https://doi.org/10.1007/s11160-009-9120-7, 2010.

Ronneberger, O., Fischer, P., and Brox, T.: U-Net: Convolutional Networks for Biomedical Image Segmentation, in: Proceedings of the Medical Image Computing and Computer-Assisted Intervention (MICCAI 2015), Munich, Germany, 5–9 October 2015, 234–241, 2015.

Rubio-Portillo, E., Villamor, A., Fernandez-Gonzalez, V., Antón, J., and Sanchez-Jerez, P.: Exploring changes in bacterial communities to assess the influence of fish farming on marine sediments, Aquaculture, 506, 459–464, https://doi.org/10.1016/j.aquaculture.2019.03.051, 2019.

Shi, T., Xu, Q., Zou, Z., and Shi, Z.: Automatic Raft Labeling for Remote Sensing Images via Dual-Scale Homogeneous Convolutional Neural Network, Remote Sens., 10, 1130, https://doi.org/10.3390/rs10071130, 2018.

Tovar, A., Moreno, C., Mánuel-Vez, M. P., and García-Vargas, M.: Environmental impacts of intensive aquaculture in marine waters, Water Res., 34, 334–342, https://doi.org/10.1016/S0043-1354(99)00102-5, 2000.

Wang, M., Cui, Q., Wang, J., Ming, D., and Lv, G.: Raft cultivation area extraction from high resolution remote sensing imagery by fusing multi-scale region-line primitive association features, ISPRS J. Photogramm. Remote Sens., 123, 104–113, https://doi.org/10.1016/j.isprsjprs.2016.10.008, 2017.

Xiao, L., Haijun, H., Xiguang, Y., and Liwen, Y.: Method to extract raft-cultivation area based on SPOT image, Sci. Surv. Mapp., 38, 41–43, https://doi.org/10.16251/j.cnki.1009-2307.2013.02.033, 2013.

Yim, J., Joo, D., Bae, J., and Kim, J.: A gift from knowledge distillation: Fast optimization, network minimization and transfer learning, in: 2017 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), IEEE, Honolulu, HI, USA, 21–26 July 2017, 1063–6919, 2017.

Zhang, L., Zhang, L., and Du, B.: Deep learning for remote sensing data: A technical tutorial on the state of the art, IEEE Geosci. Remote Sens. Mag., 4, 22–40, https://doi.org/10.1109/MGRS.2016.2540798, 2016.

Zhang, X., Zou, J., He, K., and Sun, J.: Accelerating Very Deep Convolutional Networks for Classification and Detection, IEEE Trans. Pattern Anal. Mach. Intell., 38, 1943–1955, https://doi.org/10.1109/TPAMI.2015.2502579, 2016.

Zhao, H., Shi, J., Qi, X., Wang, X., and Jia, J.: Pyramid scene parsing network, in: 2017 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), IEEE, Honolulu, HI, USA, 21–26 July 2017, 6230–6239, 2017.

Zhao, W. and Du, S.: Learning multiscale and deep representations for classifying remotely sensed imagery, ISPRS J. Photogramm. Remote Sens., 113, 155–165, https://doi.org/10.1016/j.isprsjprs.2016.01.004, 2016.

Zheng, Y., Wu, J., Wang, A., and Chen, J.: Object- and pixel-based classifications of macroalgae farming area with high spatial resolution imagery, Geocarto Int., 33, 1048–1063, https://doi.org/10.1080/10106049.2017.1333531, 2017.